Development of a method for changing the surface properties of a three-dimensional user avatar

DOI: https://doi.org/10.15587/2706-5448.2023.277933

Keywords: digital face, game engine, three-dimensional face modelling, digital avatar, semi-realistic avatar

Abstract

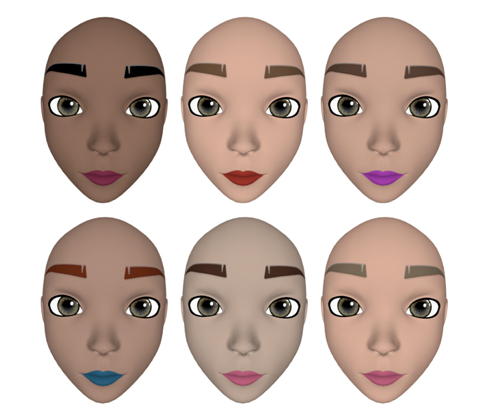

The object of this study is the process of changing the surface properties of a three-dimensional user avatar in real time. The work addresses the limitations of existing solutions for synthesizing 3D user avatars, particularly in realism and personalization on mobile devices. It also tackles the challenge of adjusting color attributes efficiently without compromising the underlying texture information, with the aim of improving the user experience in applications such as gaming, virtual reality, and social media platforms. The proposed method consists of three key components: pre-designed 3D models, multi-layer texturing, and a software and hardware implementation. The multi-layer texturing approach combines several texture maps, such as diffuse and occlusion maps, which helps integrate texture attributes smoothly and increases the overall realism of 3D avatars. Surface properties are changed in real time by blending the diffuse map with other texture maps on the GPU through the Metal framework, so users can adjust the color attributes of their 3D avatars efficiently while the underlying texture information is preserved. The paper presents a software algorithm that uses the SceneKit game engine and the Metal framework to render 3D avatars on iOS devices. The outcome of the developed method and tools is a mobile application for the iOS platform that allows users to modify a digital 3D avatar by changing the model's colors. The paper reports the results of testing the proposed method, the tools, and the developed application, and compares them with existing industry solutions. The developed method can be applied in gaming, virtual reality, video conferencing, and social media platforms, offering greater personalization and a more immersive user experience.
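The color-adjustment step described in the abstract can be illustrated with a small, framework-free sketch. It assumes that recoloring is done by multiplying each diffuse-map texel by a user-chosen tint, blending toward that result by a strength factor, and then darkening by an occlusion map. This blend rule is an illustrative choice, not the authors' published Metal shader, and `RGB`, `mix`, and `recolor` are hypothetical names. On a device, the same per-texel arithmetic would run on the GPU, for example in a Metal fragment shader or a SceneKit shader modifier.

```swift
// Minimal RGB texel type for this sketch; channels are in 0...1.
// Hypothetical helper for illustration, not a SceneKit or Metal type.
struct RGB: Equatable {
    var r: Double
    var g: Double
    var b: Double

    // Per-channel product of two colors (used for multiplicative tinting).
    static func * (lhs: RGB, rhs: RGB) -> RGB {
        RGB(r: lhs.r * rhs.r, g: lhs.g * rhs.g, b: lhs.b * rhs.b)
    }

    // Scales every channel by the same factor.
    static func * (lhs: RGB, scale: Double) -> RGB {
        RGB(r: lhs.r * scale, g: lhs.g * scale, b: lhs.b * scale)
    }
}

// Linear interpolation x + (y - x) * t, the same formula as mix() in the
// Metal Shading Language.
func mix(_ x: RGB, _ y: RGB, _ t: Double) -> RGB {
    RGB(r: x.r + (y.r - x.r) * t,
        g: x.g + (y.g - x.g) * t,
        b: x.b + (y.b - x.b) * t)
}

// Hypothetical recoloring pass over a diffuse map and a matching occlusion map.
// - diffuse:   texels of the diffuse (albedo) map
// - occlusion: per-texel ambient occlusion (1 = unoccluded, 0 = fully occluded)
// - tint:      the color chosen by the user
// - strength:  0 keeps the original texture, 1 applies the full tint
func recolor(diffuse: [RGB], occlusion: [Double], tint: RGB, strength: Double) -> [RGB] {
    precondition(diffuse.count == occlusion.count, "texture maps must have the same size")
    let t = min(max(strength, 0), 1)
    var result: [RGB] = []
    result.reserveCapacity(diffuse.count)
    for (texel, ao) in zip(diffuse, occlusion) {
        // Blending toward texel * tint scales each channel of a texel by a
        // factor that depends only on tint and t, so light/dark detail
        // painted into the diffuse map keeps its relative contrast.
        let tinted = mix(texel, texel * tint, t)
        // Simplification: darken by occlusion directly. A physically based
        // renderer would apply AO to the ambient lighting term only.
        result.append(tinted * min(max(ao, 0), 1))
    }
    return result
}
```

Because the blend is linear in `t`, each channel of the result equals `texel * ((1 - t) + t * tint)`. Texture contrast therefore survives at every strength, not only at full tint, and only the occlusion term varies from texel to texel.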


License

Copyright (c) 2023 Dmytro Ostrovka, Vasyl Teslyuk

This work is licensed under a Creative Commons Attribution 4.0 International License.

The terms for establishing authorship and transferring copyright are set out in the License Agreement. In particular, the authors retain authorship of their manuscript and grant the journal the right of first publication of this work under the terms of the Creative Commons CC BY license. At the same time, they may independently enter into additional agreements for the non-exclusive distribution of the work in the form in which it was published by this journal, provided that a link to the first publication of the article in this journal is preserved.