Model development of dynamic receptive field for remote sensing imageries

DOI: https://doi.org/10.15587/2706-5448.2025.323698

Keywords: receptive fields, convolutional neural networks, Swin Transformers, remote sensing, scene localization, semantic segmentation

Abstract

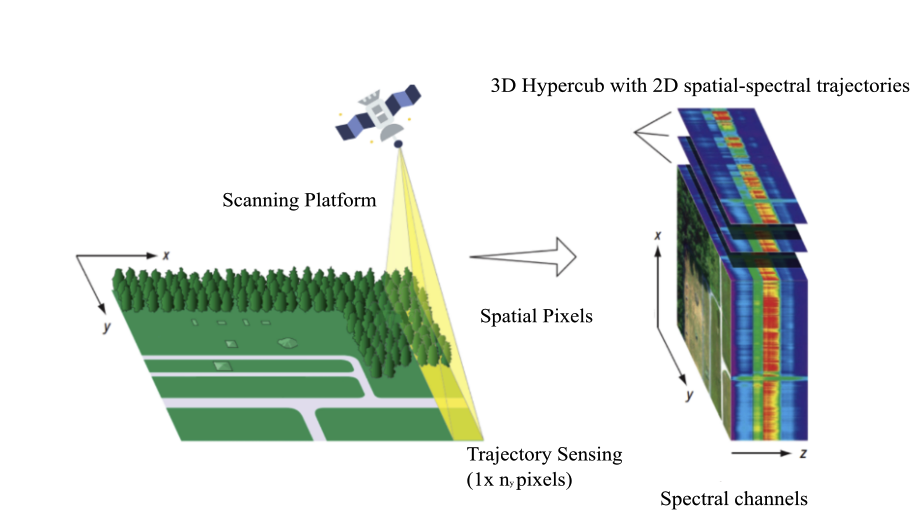

The object of research is the integration of a dynamic receptive field attention module (DReAM) into Swin Transformers to improve scene localization and semantic segmentation of high-resolution remote sensing imagery. The study develops a model that adjusts its receptive field dynamically and uses attention mechanisms to strengthen multi-scale feature extraction from high-resolution remote sensing data.

Traditional approaches, particularly convolutional neural networks (CNNs), suffer from fixed receptive fields, which hinder their ability to capture both fine details and long-range dependencies in large-scale remote sensing images. This limitation reduces the effectiveness of conventional models in handling spatially complex and multi-scale objects, leading to inaccuracies in object segmentation and scene interpretation.
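The receptive field of a plain convolutional stack is fixed by its architecture: it depends only on kernel sizes, strides and dilations, not on the image. As a minimal sketch (not taken from the paper), the standard recurrence for the theoretical receptive field can be computed as follows; the function name `receptive_field` and the example layer configurations are illustrative assumptions.

```python
from typing import Iterable, Tuple


def receptive_field(layers: Iterable[Tuple[int, int, int]]) -> int:
    """Theoretical receptive field (in input pixels) of a stack of conv/pool layers.

    Each layer is (kernel_size, stride, dilation). Uses the standard recurrence
        r_l = r_{l-1} + (k_l - 1) * d_l * j_{l-1},   j_l = j_{l-1} * s_l
    where j is the cumulative stride (jump) between adjacent output positions.
    """
    r, j = 1, 1
    for k, s, d in layers:
        r += (k - 1) * d * j
        j *= s
    return r


# Three plain 3x3 convolutions, stride 1: the same 7x7 window for every input.
print(receptive_field([(3, 1, 1)] * 3))                    # 7
# Same depth with dilations 1, 2, 4: larger, but still fixed once the network is built.
print(receptive_field([(3, 1, 1), (3, 1, 2), (3, 1, 4)]))  # 15
# A 7x7 stride-2 convolution followed by a 3x3 stride-2 pooling layer.
print(receptive_field([(7, 2, 1), (3, 2, 1)]))             # 11
```

Whatever the image content, these values do not change, which is the limitation described above. The effective receptive field observed in trained networks is typically smaller than this theoretical bound.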

The DReAM-CAN model incorporates a dynamic receptive field scaling mechanism and a composite attention framework that combines CNN-based feature extraction with Swin Transformer self-attention. This approach enables the model to dynamically adjust its receptive field, efficiently process objects of various sizes, and better capture both local textures and global scene context. As a result, the model significantly improves segmentation accuracy and spatial adaptability in remote sensing imagery.
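The abstract does not specify how DReAM-CAN scales its receptive field. One common way to make a receptive field input-dependent, similar in spirit to selective-kernel gating, is to run parallel branches with different dilation rates and mix them with softmax weights computed from the input. The NumPy sketch that follows illustrates that idea under these assumptions; `dynamic_rf_block`, the gating parameters `gate_w`/`gate_b` and the dilation set (1, 2, 4) are hypothetical and are not the authors' implementation.

```python
import numpy as np


def dilated_conv2d(x: np.ndarray, kernel: np.ndarray, dilation: int) -> np.ndarray:
    """'Same'-size 2D cross-correlation of a single-channel map with a dilated odd kernel."""
    k = kernel.shape[0]
    pad = dilation * (k // 2)
    xp = np.pad(x, pad)  # zero padding keeps the output H x W
    h, w = x.shape
    out = np.zeros((h, w))
    for i in range(k):
        for j in range(k):
            # Tap (i, j) samples the input at offset ((i - k//2) * d, (j - k//2) * d).
            out += kernel[i, j] * xp[i * dilation:i * dilation + h, j * dilation:j * dilation + w]
    return out


def softmax(z: np.ndarray) -> np.ndarray:
    e = np.exp(z - z.max())
    return e / e.sum()


def dynamic_rf_block(x, kernels, dilations, gate_w, gate_b):
    """Hypothetical dynamic-receptive-field block.

    Each branch is a 3x3 kernel with a different dilation d (receptive field 2d + 1).
    A per-branch global-average descriptor is mapped to softmax weights, so the mix
    of small and large receptive fields depends on the input.
    """
    branches = np.stack([dilated_conv2d(x, k, d) for k, d in zip(kernels, dilations)])
    descriptor = branches.mean(axis=(1, 2))          # shape (num_branches,)
    weights = softmax(gate_w @ descriptor + gate_b)  # input-dependent branch weights
    return np.tensordot(weights, branches, axes=1), weights


rng = np.random.default_rng(0)
dilations = [1, 2, 4]
kernels = [rng.standard_normal((3, 3)) for _ in dilations]
gate_w, gate_b = rng.standard_normal((3, 3)), np.zeros(3)
x = rng.standard_normal((32, 32))
y, weights = dynamic_rf_block(x, kernels, dilations, gate_w, gate_b)
print(y.shape, weights.round(3))
```

Because the branch weights are recomputed for every input, a scene dominated by large homogeneous regions can shift weight toward the dilation-4 branch, while a fine-grained scene can favor dilation 1. In a trained network the kernels and gate would be learned rather than random.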

These results are explained by the model’s ability to modify its receptive fields dynamically according to scene complexity and object distribution. The self-attention mechanism further refines feature extraction by selectively emphasizing relevant spatial dependencies, suppressing noise, and sharpening segmentation boundaries. The hybrid CNN-Transformer architecture is designed to balance computational efficiency and accuracy.
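The abstract attributes part of the gain to self-attention from the Swin Transformer backbone. As a simplified sketch, assuming a single head and plain NumPy instead of a deep learning framework, the following shows Swin-style window attention: the feature map is partitioned into non-overlapping ws x ws windows, scaled dot-product attention is computed inside each window, and an optional cyclic shift moves the window boundaries between layers. Swin's attention mask for shifted windows, relative position bias and multi-head projections are omitted; the function names and random projection matrices are illustrative assumptions.

```python
import numpy as np


def window_partition(x: np.ndarray, ws: int) -> np.ndarray:
    """(H, W, C) -> (num_windows, ws*ws, C) non-overlapping windows."""
    H, W, C = x.shape
    x = x.reshape(H // ws, ws, W // ws, ws, C).transpose(0, 2, 1, 3, 4)
    return x.reshape(-1, ws * ws, C)


def window_reverse(windows: np.ndarray, ws: int, H: int, W: int) -> np.ndarray:
    """Inverse of window_partition: (num_windows, ws*ws, C) -> (H, W, C)."""
    C = windows.shape[-1]
    x = windows.reshape(H // ws, W // ws, ws, ws, C).transpose(0, 2, 1, 3, 4)
    return x.reshape(H, W, C)


def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def window_attention(x, ws, wq, wk, wv, shift=0):
    """Single-head self-attention restricted to ws x ws windows (optionally cyclically shifted)."""
    H, W, _ = x.shape
    assert H % ws == 0 and W % ws == 0, "H and W must be multiples of the window size"
    if shift:
        x = np.roll(x, shift=(-shift, -shift), axis=(0, 1))
    win = window_partition(x, ws)                         # (nW, N, C), N = ws*ws tokens
    q, k, v = win @ wq, win @ wk, win @ wv
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(q.shape[-1])
    attn = softmax(scores)                                # (nW, N, N), rows sum to 1
    out = window_reverse(attn @ v, ws, H, W)
    if shift:
        out = np.roll(out, shift=(shift, shift), axis=(0, 1))  # undo the cyclic shift
    return out, attn


rng = np.random.default_rng(0)
H, W, C, ws = 8, 8, 4, 4
wq, wk, wv = (rng.standard_normal((C, C)) for _ in range(3))
x = rng.standard_normal((H, W, C))
out, attn = window_attention(x, ws, wq, wk, wv)
shifted_out, _ = window_attention(x, ws, wq, wk, wv, shift=ws // 2)
print(out.shape, attn.shape)  # (8, 8, 4) (4, 16, 16)
```

Each window mixes only its own 16 tokens, so for a fixed window size the attention cost grows linearly with the number of pixels; the shifted call regroups tokens so that information can cross the previous window boundaries.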

The DReAM-CAN model is particularly applicable to the analysis of high-resolution satellite and aerial imagery, with uses in environmental monitoring, land-use classification, forestry assessment, precision agriculture, and disaster impact analysis. Its ability to adapt to different scales and spatial complexities makes it well suited to real-time and large-scale remote sensing tasks that require precise scene localization and segmentation.



License

Copyright (c) 2025 Yurii Pushkarenko, Volodymyr Zaslavskyi

This work is licensed under a Creative Commons Attribution 4.0 International License.

The consolidation of copyright and the conditions for its transfer (identification of authorship) are set out in the License Agreement. In particular, the authors retain authorship of their manuscript and grant the journal the right of first publication of this work under the terms of the Creative Commons CC BY license. The authors may also enter into separate, additional agreements for the non-exclusive distribution of the work in the form in which it was published by this journal, provided that a link to the first publication of the article in this journal is preserved.