Development of a rule-based LLM prompting method for high-accuracy event-schema evolution

DOI:

https://doi.org/10.15587/2706-5448.2025.342365

Keywords:

data evolution, decision support, event sourcing, large language models

Abstract

The object of this research is the process of selecting an architectural strategy for event-schema evolution in event-sourcing systems. This process involves complex architectural trade-offs and is a critical task for maintaining the integrity and long-term viability of the immutable event log.

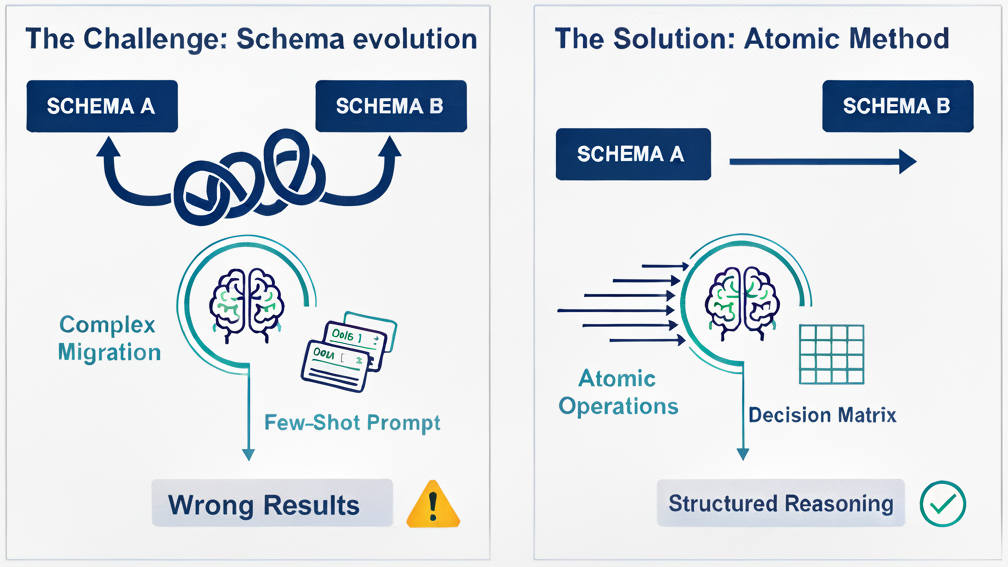

The problem addressed is the inconsistent performance and limited reliability of standard LLM prompting techniques such as few-shot prompting. These methods rely on heuristic pattern matching and thus lack the systematic framework required for high-stakes architectural decisions. The issue is compounded by the subjectivity inherent in manual strategy selection by engineers.

The principal result is the development of a rule-based “atomic taxonomy” prompting method. This approach enabled large-scale models (GPT-5, Gemini-2.5-pro) to achieve perfect predictive performance (a macro F1-score of 1.0), while degrading the performance of most medium-sized models relative to the few-shot prompting baseline.
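For readers unfamiliar with the metric, the following is a minimal sketch of how a macro F1-score is computed: F1 is calculated separately for each class and the per-class values are averaged with equal weight. The function name `macro_f1` and the strategy labels are hypothetical placeholders chosen for illustration; they are not the label set or evaluation code used in the study.

```python
from collections import Counter


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores (illustrative sketch)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    labels = sorted(set(y_true) | set(y_pred))
    if not labels:
        raise ValueError("at least one sample is required")

    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted class gains a false positive
            fn[truth] += 1  # true class gains a false negative

    scores = []
    for label in labels:
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the denominator is 0
        denom = 2 * tp[label] + fp[label] + fn[label]
        scores.append(2 * tp[label] / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Hypothetical strategy labels, for illustration only.
expected = ["upcasting", "upcasting", "copy_transform", "weak_schema"]
all_correct = list(expected)
one_error = ["upcasting", "copy_transform", "copy_transform", "weak_schema"]

perfect_score = macro_f1(expected, all_correct)
degraded_score = macro_f1(expected, one_error)
```

A score of 1.0 requires every test case in every class to be classified correctly. Because each class contributes equally to the average, a single error in a rarely occurring class lowers the score as much as an error in a frequent one.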

This divergence is explained by the cognitive demands of the task. The proposed method shifts the process from heuristic pattern matching to structured, compositional reasoning. The results indicate that large models possess the necessary architectural capabilities to execute this formal logic, whereas medium-sized models are overwhelmed by its cognitive overhead, making a simpler, example-based approach more effective for them.

In practice, the findings provide a clear, actionable guideline for architects. The atomic taxonomy serves as a robust framework to assist manual decision-making. For automated support systems, its application is recommended exclusively with large-scale LLMs capable of advanced reasoning. For systems that rely on smaller, more efficient models, the study concludes that traditional few-shot prompting remains the more reliable strategy.


License

Copyright (c) 2025 Roman Malyi, Pavlo Serdyuk

This work is licensed under a Creative Commons Attribution 4.0 International License.

The consolidation and conditions for the transfer of copyright (identification of authorship) are set out in the License Agreement. In particular, the authors retain the right of authorship of their manuscript and transfer the right of first publication of this work to the journal under the terms of the Creative Commons CC BY license. They may also conclude separate additional agreements for the non-exclusive distribution of the work in the form in which it was published by this journal, provided that a link to the first publication of the article in this journal is preserved.