Evaluation of the performance of data classification models for aerial imagery under resource constraints

DOI: https://doi.org/10.15587/2706-5448.2025.323322

Keywords: neural networks, machine learning, image processing, classification, convolutional neural networks, unmanned aerial vehicles

Abstract

The object of the study is the processing of aerial imagery with classification models under limited computational resources, particularly onboard unmanned aerial vehicles (UAVs).

One of the most challenging problems is adapting classification models to the scale variations and perspective distortions that occur during UAV maneuvers. In addition, the high computational cost of traditional methods, such as sliding-window approaches, significantly limits their applicability on resource-constrained devices.
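To make the cost of sliding-window scanning concrete, the sketch below counts classifier evaluations per frame at a single scale and compares it with cutting the frame into disjoint tiles. The function names, the 1920x1080 frame, the 224-pixel window, and the 32-pixel stride are hypothetical illustrative values, not parameters taken from the study; scanning at additional scales adds further evaluations for each scale.

```python
def sliding_window_count(frame_w: int, frame_h: int, win: int, stride: int) -> int:
    """Classifier evaluations needed to scan one frame with a square window
    of side `win` moved by `stride` pixels (single scale, no padding)."""
    if win > frame_w or win > frame_h:
        return 0  # the window does not fit into the frame at all
    cols = (frame_w - win) // stride + 1
    rows = (frame_h - win) // stride + 1
    return rows * cols


def non_overlapping_count(frame_w: int, frame_h: int, win: int) -> int:
    """Evaluations when the frame is cut into disjoint win x win tiles."""
    return (frame_w // win) * (frame_h // win)


if __name__ == "__main__":
    # Hypothetical example: Full HD frame, 224x224 classifier input.
    print(sliding_window_count(1920, 1080, 224, 32))  # 1458
    print(non_overlapping_count(1920, 1080, 224))     # 32
```

With these assumed values, a 32-pixel stride needs roughly 45 times more classifier calls per frame than disjoint tiling, which is the kind of overhead that single-board computers struggle to absorb.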

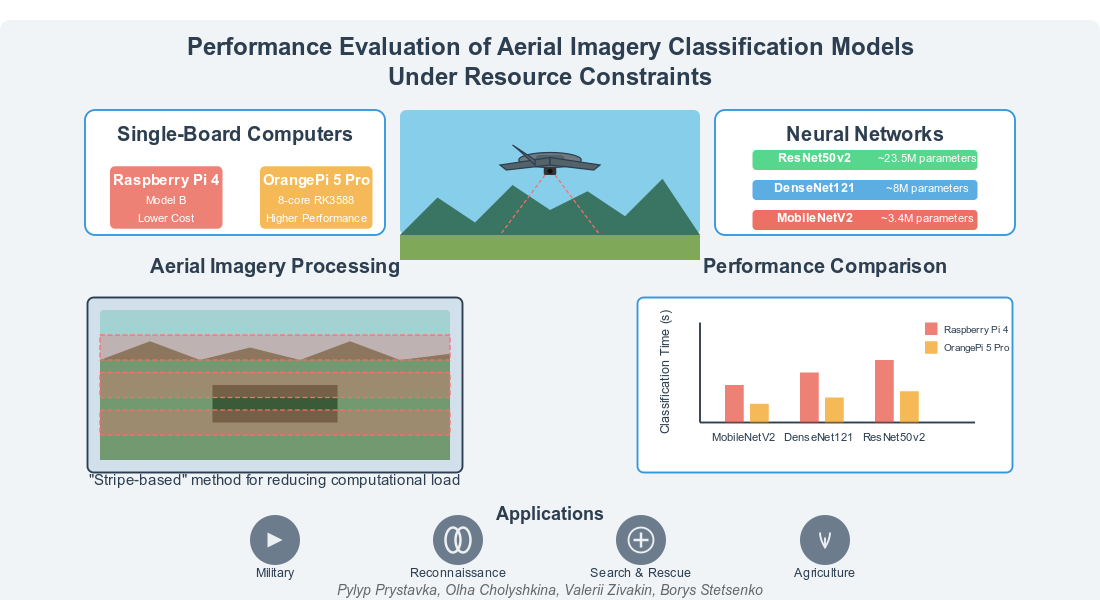

The study utilized state-of-the-art neural network classifiers, including ResNet50v2, DenseNet121, and MobileNetV2, which were fine-tuned on a specialized aerial imagery dataset.

The fine-tuned classifiers were evaluated experimentally on the Raspberry Pi 4 Model B and Orange Pi 5 Pro, single-board computers with limited computational power that simulate the constrained resources of UAV systems. To optimize performance, a stripe-based processing approach was proposed for streaming video, balancing processing speed against the amount of analyzed data in surveillance applications. Execution times were measured for each type of classifier running on these single-board computers, which are suitable for UAV deployment.
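The abstract does not specify how the stripe-based approach works, so the following is only one plausible reading, sketched under stated assumptions: each video frame contributes a single horizontal stripe whose height equals the classifier input size, consecutive frames take consecutive stripes (wrapping around), and the number of patches classified per frame is capped by a latency budget. The names `stripe_tiles`, `tiles_within_budget`, and `plan_frame`, as well as the 224-pixel input, 40 ms per-patch latency, and 5 FPS target, are hypothetical placeholders rather than measurements from the study.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x, y, width, height) in pixels


def stripe_tiles(frame_w: int, frame_h: int, tile: int, frame_idx: int) -> List[Box]:
    """Disjoint tiles of the stripe assigned to frame number `frame_idx`.

    Hypothetical scheme: the frame holds frame_h // tile stripes of height
    `tile`; stripe k is processed on frames k, k + n, k + 2n, ... so the
    whole frame is covered once every n frames. Rows below the last full
    stripe are not covered in this simplified version.
    """
    n_stripes = frame_h // tile
    if n_stripes == 0 or frame_w < tile:
        return []
    y = (frame_idx % n_stripes) * tile
    return [(x, y, tile, tile) for x in range(0, frame_w - tile + 1, tile)]


def tiles_within_budget(patch_latency_ms: float, target_fps: float) -> int:
    """Number of patches that fit into one frame interval."""
    if patch_latency_ms <= 0 or target_fps <= 0:
        raise ValueError("latency and frame rate must be positive")
    frame_budget_ms = 1000.0 / target_fps
    return int(frame_budget_ms // patch_latency_ms)


def plan_frame(frame_w: int, frame_h: int, tile: int, frame_idx: int,
               patch_latency_ms: float, target_fps: float) -> List[Box]:
    """Tiles to classify on this frame: the stripe, truncated to the budget."""
    budget = tiles_within_budget(patch_latency_ms, target_fps)
    return stripe_tiles(frame_w, frame_h, tile, frame_idx)[:budget]


if __name__ == "__main__":
    # Hypothetical values: Full HD stream, 224x224 input, 40 ms per patch, 5 FPS.
    for i in range(4):
        print(i, plan_frame(1920, 1080, 224, i, 40.0, 5.0))
```

Lowering the target frame rate or using a faster classifier raises the per-frame budget, so more of each stripe is analyzed; this is the speed-versus-coverage trade-off the stripe-based approach is meant to manage.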

This approach enables real-time processing of aerial imagery, substantially increasing UAV system autonomy. Compared with traditional methods, the proposed solutions offer reduced power consumption, faster computation, and improved classification accuracy. The results indicate strong potential for applications in military operations, reconnaissance, search-and-rescue missions, and agricultural technology.



License

Copyright (c) 2025 Pylyp Prystavka, Olha Cholyshkina, Valerii Zivakin, Borys Stetsenko

This work is licensed under a Creative Commons Attribution 4.0 International License.

The consolidation of copyright and the conditions for its transfer (identification of authorship) are set out in the License Agreement. In particular, the authors retain authorship of their manuscript and grant the journal the right of first publication of this work under the terms of the Creative Commons CC BY license. The authors may also enter into separate additional agreements for the non-exclusive distribution of the work in the form in which it was published by this journal, provided that a link to its first publication in this journal is preserved.