An overview of current issues in automatic text summarization of natural language using artificial intelligence methods

DOI:

https://doi.org/10.15587/2706-5448.2024.309472

Keywords:

automatic abstracting, natural language processing, artificial intelligence, generative models, neural networks, deep learning

Abstract

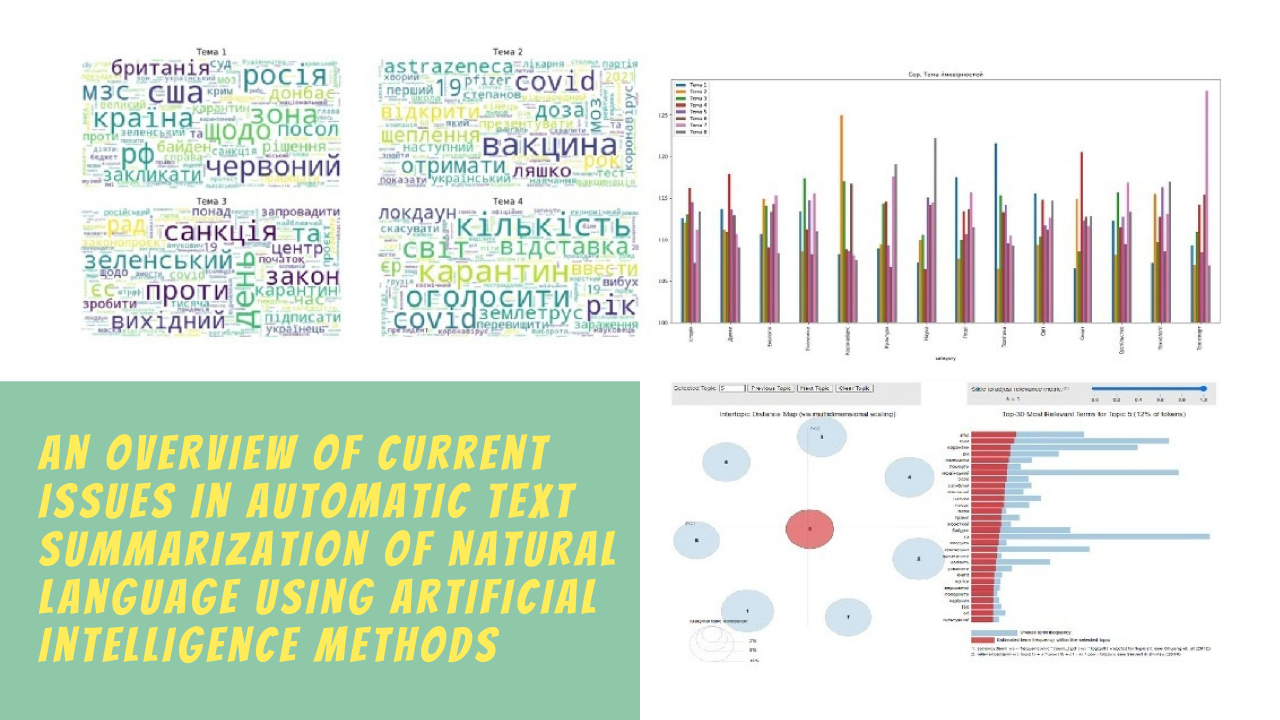

The object of the research is the task of automatic abstracting of natural language texts. The task matters because it remains difficult to produce abstracts that adequately reflect the content of the original text and highlight its key information. Doing so requires models to analyze the context of the text in depth, which complicates the abstracting process.

Results are presented that demonstrate the effectiveness of generative models based on neural networks, semantic text analysis methods, and deep learning for the automatic creation of abstracts. The abstracts produced by these models showed a high level of adequacy and informativeness. GPT (Generative Pre-trained Transformer) generates text that reads as if it were written by a human, which makes it useful for automatic abstract generation.

For example, the GPT model generates condensed summaries of input text, while the BERT model is used for text summarization in many areas, including search engines and other natural language processing applications. This yields short but informative abstracts that retain the essential content of the original and are suitable for summarizing web pages, emails, social media posts, and other content. Compared to traditional abstracting methods, artificial intelligence offers greater accuracy and informativeness and processes large volumes of text more efficiently, which facilitates access to information and improves productivity in text processing.
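To make the comparison with traditional abstracting methods concrete, the sketch below illustrates one classic extractive heuristic: score each sentence by the average corpus frequency of its content words and keep the top-scoring sentences in their original order. It is only an illustration under stated assumptions, not the method evaluated in this work; the stop-word list, the naive sentence splitter, and the function names (`split_sentences`, `tokenize`, `summarize`) are hypothetical choices made for brevity.

```python
import re
from collections import Counter

# Assumption: a deliberately tiny stop-word list for illustration only;
# a practical system would use a fuller, language-specific list.
STOP_WORDS = {
    "the", "a", "an", "of", "and", "to", "in", "is", "are",
    "for", "that", "it", "on", "with", "as", "by", "this",
}


def split_sentences(text):
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def tokenize(sentence):
    """Lowercase the sentence and keep alphabetic tokens that are not stop words."""
    return [w for w in re.findall(r"[a-z']+", sentence.lower()) if w not in STOP_WORDS]


def summarize(text, max_sentences=2):
    """Return up to max_sentences sentences with the highest average word frequency."""
    sentences = split_sentences(text)
    # Word frequencies are computed over the whole input text.
    freq = Counter(w for s in sentences for w in tokenize(s))

    def score(sentence):
        words = tokenize(sentence)
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    # Rank sentence indices by score, then restore document order for readability.
    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:max_sentences])
    return " ".join(sentences[i] for i in keep)


if __name__ == "__main__":
    sample = (
        "Summarization models shorten text. "
        "Summarization models keep key text. "
        "Cats sleep."
    )
    print(summarize(sample, max_sentences=1))
```

A heuristic of this kind only copies existing sentences and has no notion of context, which is the limitation that generative and transformer-based models are meant to address.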

Automatic abstracting of texts using artificial intelligence models significantly reduces the time required to analyze large volumes of textual information. This is especially important in today's information environment, where the amount of available data is constantly growing. The use of these models promotes efficient use of resources and increases overall productivity in a variety of fields, including scientific research, education, business, and media.

License

Copyright (c) 2024 Oleksii Kuznietsov, Gennadiy Kyselov

This work is licensed under a Creative Commons Attribution 4.0 International License.

The assignment of copyright and the conditions for its transfer (identification of authorship) are set out in the License Agreement. In particular, the authors retain the right of authorship of their manuscript and grant the journal the right of first publication of the work under the terms of the Creative Commons CC BY license. They may also independently enter into additional agreements for the non-exclusive distribution of the work in the form in which it was published by this journal, provided that a reference to its first publication in this journal is preserved.