Applying machine learning to improve a texture type image

DOI: https://doi.org/10.15587/1729-4061.2023.275984

Keywords:

image processing, SRGAN, ESRGAN, VGG19, MobileNetV2, machine learning, Super-Resolution

Abstract

The paper is devoted to machine learning methods for enhancing texture-type images, specifically the objects contained in them. The aim of the study is to develop image enhancement algorithms and to determine the accuracy of the considered models on this type of image. Although modern digital imaging systems usually produce high-quality images, external factors or system limitations can still leave images in many areas of science with low quality and resolution. Therefore, threshold values for image processing in a given field of science are considered.

Image enhancement is the first step in image processing, and image signal processing remains a focus of attention for specialists in many fields. With the development of information technology, automatic enhancement of images used in any field of science has become an urgent problem. The objects analyzed in the images were state license plates of cars, faces, and field sections in satellite images.

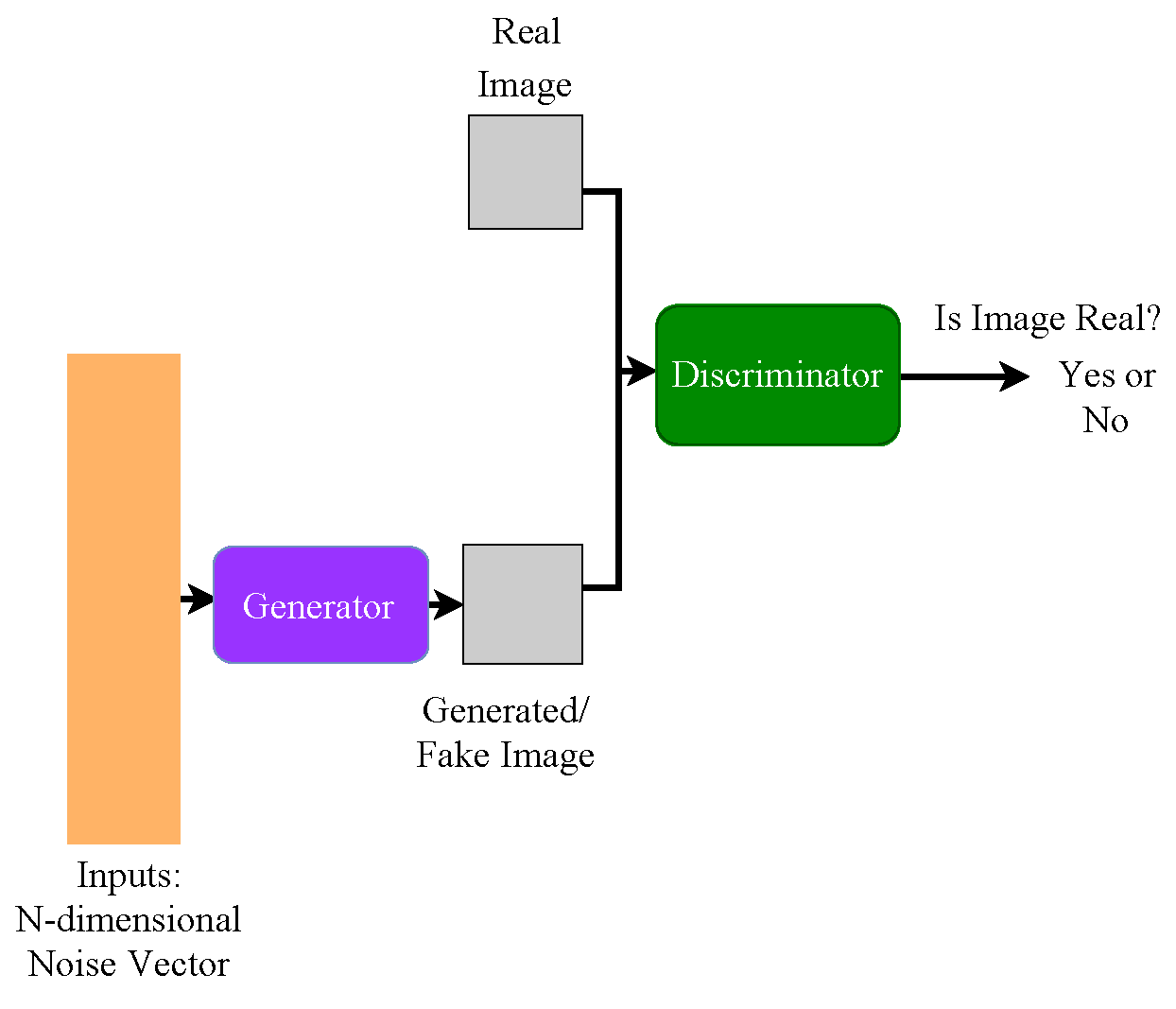

In this work, we propose to use the Super-Resolution Generative Adversarial Network (SRGAN) and Enhanced Super-Resolution Generative Adversarial Network (ESRGAN) models. To this end, an experiment was conducted to retrain a pretrained ESRGAN model with three different convolutional neural network architectures, namely VGG19, MobileNetV2, and ResNet152V2, in order to add a perceptual loss (by pixels), to increase the sharpness of the predicted test images, and to compare the performance of each retrained model. The study showed that using convolutional neural networks for image enhancement achieves high accuracy, with average values of 91 % for ESRGAN+MobileNetV2, 86 % for ESRGAN+VGG19, and 96 % for ESRGAN+ResNet152V2.
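The retraining described above relies on a perceptual loss: rather than comparing the super-resolved (SR) and high-resolution (HR) images only pixel by pixel, both are passed through a frozen CNN (VGG19, MobileNetV2 or ResNet152V2 in this study) and their feature maps are compared. The following is a minimal plain-Python sketch of that idea, under stated assumptions: the pretrained backbone is replaced by a hypothetical fixed bank of two Sobel filters so the example runs without downloading weights, and the helper names (`extract_features`, `perceptual_loss`, `content_loss`) and the weight `eta` are illustrative, not the authors' code.

```python
"""Minimal perceptual-loss sketch (pure Python, no deep-learning framework).

Assumption: the pretrained backbone (VGG19, MobileNetV2 or ResNet152V2 in the
paper) is replaced by a hypothetical fixed bank of 3x3 edge filters, so the
example runs without downloading weights.
"""
from typing import List

Image = List[List[float]]  # grayscale image: rows of pixel intensities

# Hypothetical stand-in for a frozen CNN layer: horizontal and vertical Sobel kernels.
FEATURE_KERNELS: List[Image] = [
    [[-1.0, 0.0, 1.0], [-2.0, 0.0, 2.0], [-1.0, 0.0, 1.0]],
    [[-1.0, -2.0, -1.0], [0.0, 0.0, 0.0], [1.0, 2.0, 1.0]],
]


def conv2d_valid(img: Image, kernel: Image) -> Image:
    """2-D cross-correlation with 'valid' padding, as a CNN layer computes it."""
    k = len(kernel)
    h, w = len(img), len(img[0])
    return [
        [
            sum(img[i + a][j + b] * kernel[a][b] for a in range(k) for b in range(k))
            for j in range(w - k + 1)
        ]
        for i in range(h - k + 1)
    ]


def extract_features(img: Image) -> List[Image]:
    """phi(x): one feature map per kernel; the extractor itself is never trained."""
    return [conv2d_valid(img, kern) for kern in FEATURE_KERNELS]


def mse(a: Image, b: Image) -> float:
    """Mean squared error between two equally sized 2-D arrays."""
    diffs = [(x - y) ** 2 for ra, rb in zip(a, b) for x, y in zip(ra, rb)]
    return sum(diffs) / len(diffs)


def pixel_l1(sr: Image, hr: Image) -> float:
    """Mean absolute pixel difference (the pixel-wise term)."""
    diffs = [abs(x - y) for rs, rh in zip(sr, hr) for x, y in zip(rs, rh)]
    return sum(diffs) / len(diffs)


def perceptual_loss(sr: Image, hr: Image) -> float:
    """Mean squared distance between feature maps of the SR and HR images."""
    fs, fh = extract_features(sr), extract_features(hr)
    return sum(mse(a, b) for a, b in zip(fs, fh)) / len(fs)


def content_loss(sr: Image, hr: Image, eta: float = 1e-2) -> float:
    """Perceptual term plus a weighted pixel-wise L1 term (eta is illustrative)."""
    return perceptual_loss(sr, hr) + eta * pixel_l1(sr, hr)


if __name__ == "__main__":
    hr = [[0.0, 0.0, 0.0, 10.0, 10.0, 10.0] for _ in range(6)]       # sharp vertical edge
    blurred = [[0.0, 0.0, 3.0, 7.0, 10.0, 10.0] for _ in range(6)]   # softened edge
    brighter = [[p + 5.0 for p in row] for row in hr]                # same edge, +5 brightness
    print(pixel_l1(blurred, hr), perceptual_loss(blurred, hr))       # 1.0 72.0
    print(pixel_l1(brighter, hr), perceptual_loss(brighter, hr))     # 5.0 0.0
```

The usage lines show why a feature-space loss pushes a model toward sharper output: the brightness shift has the larger pixel error but leaves the edge features unchanged, whereas the blurred edge has a small pixel error and a large perceptual error. In a real setup the Sobel bank would be swapped for a truncated pretrained network, and, as in ESRGAN, the content terms would be combined with an adversarial loss.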

Supporting Agency

- The authors express their gratitude to the A. I. Barayev Scientific and Production Center of Grain Farming for providing data on agricultural crops of Northern Kazakhstan used in the preparation of this article.

License

Copyright (c) 2023 Jamalbek Tussupov, Kairat Kozhabai, Aigulim Bayegizova, Leila Kassenova, Zhanat Manbetova, Natalya Glazyrina, Bersugir Mukhamedi, Miras Yeginbayev

This work is licensed under a Creative Commons Attribution 4.0 International License.

The securing of copyright and the conditions for its transfer (identification of authorship) are set out in the License Agreement. In particular, the authors retain authorship of their manuscript and grant the journal the right of first publication of this work under the terms of the Creative Commons CC BY license. The authors may also enter into separate additional agreements for the non-exclusive distribution of the work in the form in which it was published by this journal, provided that a link to the first publication of the article in this journal is preserved.

A license agreement is a document in which the author warrants that he/she owns all copyright in the work (manuscript, article, etc.).

By signing the License Agreement with TECHNOLOGY CENTER PC, the authors retain all rights to further use of their work, provided that they link to the edition of the journal in which the work was published.

Under the terms of the License Agreement, the Publisher TECHNOLOGY CENTER PC does not take away the authors' copyright; it receives permission from the authors to use and disseminate the publication through the world's scientific resources (its own electronic resources, scientometric databases, repositories, libraries, etc.).

Without a signed License Agreement, or if the agreement lacks identifiers that establish the identity of the author, the editors have no right to work with the manuscript.

Note that there is another type of agreement between authors and publishers, under which copyright is transferred from the authors to the publisher. In that case, the authors lose ownership of their work and may not use it in any way.