Reducing the volume of computations when building analogs of neural networks for the first stage of an ensemble classifier with stacking

DOI:

https://doi.org/10.15587/1729-4061.2024.299734

Keywords:

multilayer perceptron, neural network, ensemble classifier, weighting coefficients, classification of objects in images

Abstract

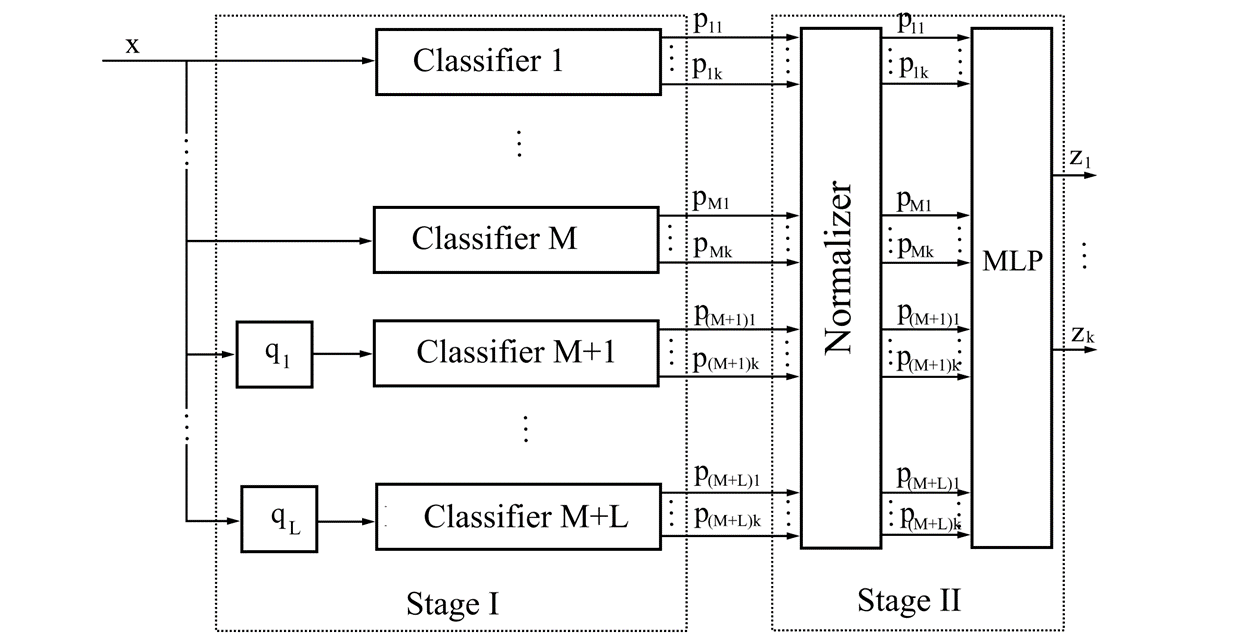

The object of research in this work is ensemble classifiers with stacking that are intended to classify objects in images when only small sets of labeled training data are available. To improve classification quality at the first stage of such a classifier, more primary classifiers with heterogeneous structured processing of the input must be placed there. However, the number of known neural networks with suitable characteristics is limited. One approach to this problem is to build analogs of known neural networks that misclassify different images than the base network does. The disadvantage of the known methods for constructing such analogs is that they require additional floating-point operations. This paper proposes and investigates a new method that forms analogs through random cyclic shifts of the rows or columns of input images. Because a cyclic shift only rearranges pixels, the method completely eliminates the additional floating-point operations. Its effectiveness is explained by the structured processing of input images in the base neural networks. Using analogs obtained by the proposed method imposes no additional restrictions in practice, because heterogeneous structured processing is already a typical requirement for base neural networks in an ensemble classifier with stacking.
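A minimal sketch of the analog-forming step follows, written in Python with NumPy. The abstract does not specify the exact shift scheme, so this assumes (hypothetically) that each analog draws one random cyclic offset per row, or per column, once, and then applies that same fixed rearrangement to every training and test image fed to it. The function names `make_row_shifts`, `shift_rows`, and `shift_columns` are illustrative and not taken from the paper. What the sketch shows is that the transformation is a pure gather: only integer index arithmetic is performed and pixel values are copied rather than combined, so no floating-point operations are added.

```python
import numpy as np


def make_row_shifts(num_lines: int, line_length: int, seed: int) -> np.ndarray:
    """Draw one random cyclic offset in [0, line_length) per row (or column).

    Hypothetical scheme: offsets are drawn once per analog and then kept fixed,
    so every image seen by that analog is rearranged identically.
    """
    rng = np.random.default_rng(seed)
    return rng.integers(0, line_length, size=num_lines)


def shift_rows(images: np.ndarray, offsets: np.ndarray) -> np.ndarray:
    """Cyclically shift row r of every image to the right by offsets[r] pixels.

    images:  array of shape (N, H, W, C)
    offsets: integer array of shape (H,)
    Only integer index arithmetic and a gather are performed; pixel values are
    copied, never added or multiplied.
    """
    _, h, w, _ = images.shape
    rows = np.arange(h)[:, None]                            # (H, 1)
    cols = (np.arange(w)[None, :] - offsets[:, None]) % w   # (H, W): source column per output pixel
    return images[:, rows, cols]                            # (N, H, W, C)


def shift_columns(images: np.ndarray, offsets: np.ndarray) -> np.ndarray:
    """Cyclically shift column c of every image downward by offsets[c] pixels.

    offsets: integer array of shape (W,). Implemented by swapping the H and W
    axes, reusing shift_rows, and swapping them back.
    """
    swapped = images.transpose(0, 2, 1, 3)
    return shift_rows(swapped, offsets).transpose(0, 2, 1, 3)


if __name__ == "__main__":
    # Stand-in for a CIFAR-10 batch: 4 RGB images of 32x32 pixels.
    batch = np.random.default_rng(0).random((4, 32, 32, 3), dtype=np.float32)
    offsets = make_row_shifts(32, 32, seed=1)  # one analog = one fixed offset vector
    analog_input = shift_rows(batch, offsets)
    print(analog_input.shape, analog_input.dtype)  # (4, 32, 32, 3) float32
```

Under this assumption, different seeds give different offset vectors, and each offset vector would presumably define a separate first-stage analog of the same base architecture. Since the rearrangement must be identical at training and inference time, the offset vector belongs to the analog's definition rather than acting as random data augmentation.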

Simulation on the CIFAR-10 data set demonstrated that the proposed technique for constructing analogs allows the ensemble classifier to achieve comparable classification quality. Using MLP-Mixer analogs improved classification quality by 4.6 %, and using CCT analogs, by 5.9 %.

References

- Yang, J., Shi, R., Wei, D., Liu, Z., Zhao, L., Ke, B. et al. (2023). MedMNIST v2 - A large-scale lightweight benchmark for 2D and 3D biomedical image classification. Scientific Data, 10 (1). https://doi.org/10.1038/s41597-022-01721-8

- Islam, Md. R., Nahiduzzaman, Md., Goni, Md. O. F., Sayeed, A., Anower, Md. S., Ahsan, M., Haider, J. (2022). Explainable Transformer-Based Deep Learning Model for the Detection of Malaria Parasites from Blood Cell Images. Sensors, 22 (12), 4358. https://doi.org/10.3390/s22124358

- Yang, X., He, X., Zhao, J., Zhang, Y., Zhang, S., Xie, P. (2020). COVID-CT-dataset: A CT scan dataset about COVID-19. arXiv. https://doi.org/10.48550/arXiv.2003.13865

- Zhang, K., Liu, X., Shen, J., Li, Z., Sang, Y., Wu, X. et al. (2020). Clinically Applicable AI System for Accurate Diagnosis, Quantitative Measurements, and Prognosis of COVID-19 Pneumonia Using Computed Tomography. Cell, 182 (5), 1360. https://doi.org/10.1016/j.cell.2020.08.029

- Dosovitskiy, A., Beyer, L., Kolesnikov, A., Weissenborn, D., Zhai, X., Unterthiner, T. et al. (2020). An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv. https://doi.org/10.48550/arXiv.2010.11929

- Sun, C., Shrivastava, A., Singh, S., Gupta, A. (2017). Revisiting Unreasonable Effectiveness of Data in Deep Learning Era. 2017 IEEE International Conference on Computer Vision (ICCV). https://doi.org/10.1109/iccv.2017.97

- Aggarwal, C. C., Sathe, S. (2017). Outlier Ensembles: An Introduction. Springer International Publishing, 276. https://doi.org/10.1007/978-3-319-54765-7

- Galchonkov, O., Babych, M., Zasidko, A., Poberezhnyi, S. (2022). Using a neural network in the second stage of the ensemble classifier to improve the quality of classification of objects in images. Eastern-European Journal of Enterprise Technologies, 3 (9 (117)), 15–21. https://doi.org/10.15587/1729-4061.2022.258187

- Galchonkov, O., Baranov, O., Babych, M., Kuvaieva, V., Babych, Y. (2023). Improving the quality of object classification in images by ensemble classifiers with stacking. Eastern-European Journal of Enterprise Technologies, 3 (9 (123)), 70–77. https://doi.org/10.15587/1729-4061.2023.279372

- Krizhevsky, A., Sutskever, I., Hinton, G. E. (2017). ImageNet classification with deep convolutional neural networks. Communications of the ACM, 60 (6), 84–90. https://doi.org/10.1145/3065386

- He, K., Zhang, X., Ren, S., Sun, J. (2016). Deep Residual Learning for Image Recognition. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR). https://doi.org/10.1109/cvpr.2016.90

- Li, K., Wang, Y., Zhang, J., Gao, P., Song, G., Liu, Y. et al. (2023). UniFormer: Unifying Convolution and Self-Attention for Visual Recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence, 45 (10), 1–18. https://doi.org/10.1109/tpami.2023.3282631

- Hassani, A., Walton, S., Shah, N., Abuduweili, A., Li, J., Shi, H. (2021). Escaping the Big Data Paradigm with Compact Transformers. arXiv. https://doi.org/10.48550/arXiv.2104.05704

- Gao, A. K. (2023). More for Less: Compact Convolutional Transformers Enable Robust Medical Image Classification with Limited Data. arXiv. https://doi.org/10.48550/arXiv.2307.00213

- Guo, M.-H., Liu, Z.-N., Mu, T.-J., Hu, S.-M. (2022). Beyond Self-Attention: External Attention Using Two Linear Layers for Visual Tasks. IEEE Transactions on Pattern Analysis and Machine Intelligence, 45 (5), 1–13. https://doi.org/10.1109/tpami.2022.3211006

- Lee-Thorp, J., Ainslie, J., Eckstein, I., Ontanon, S. (2022). FNet: Mixing Tokens with Fourier Transforms. Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies. https://doi.org/10.18653/v1/2022.naacl-main.319

- Liu, Z., Lin, Y., Cao, Y., Hu, H., Wei, Y., Zhang, Z. et al. (2021). Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. 2021 IEEE/CVF International Conference on Computer Vision (ICCV). https://doi.org/10.1109/iccv48922.2021.00986

- Tolstikhin, I., Houlsby, N., Kolesnikov, A., Beyer, L., Zhai, X., Unterthiner, T. (2021). MLP-Mixer: An all-MLP Architecture for Vision. arXiv. https://doi.org/10.48550/arXiv.2105.01601

- Liu, H., Dai, Z., So, D. R., Le, Q. V. (2021). Pay Attention to MLPs. arXiv. https://doi.org/10.48550/arXiv.2105.08050

- Lian, D., Yu, Z., Sun, X., Gao, S. (2021). AS-MLP: An Axial Shifted MLP Architecture for Vision. arXiv. https://doi.org/10.48550/arXiv.2107.08391

- Wang, Z., Jiang, W., Zhu, Y., Yuan, L., Song, Y., Liu, W. (2022). DynaMixer: A Vision MLP Architecture with Dynamic Mixing. arXiv. https://doi.org/10.48550/arXiv.2201.12083

- Hu, Z., Yu, T. (2023). Dynamic Spectrum Mixer for Visual Recognition. arXiv. https://doi.org/10.48550/arXiv.2309.06721

- Lv, T., Bai, C., Wang, C. (2022). MDMLP: Image Classification from Scratch on Small Datasets with MLP. arXiv. https://doi.org/10.48550/arXiv.2205.14477

- Chen, S., Xie, E., Ge, C., Chen, R., Liang, D., Luo, P. (2023). CycleMLP: A MLP-Like Architecture for Dense Visual Predictions. IEEE Transactions on Pattern Analysis and Machine Intelligence, 45 (12), 14284–14300. https://doi.org/10.1109/tpami.2023.3303397

- Borji, A., Lin, S. (2022). SplitMixer: Fat Trimmed From MLP-like Models. arXiv. https://doi.org/10.48550/arXiv.2207.10255

- Yu, T., Li, X., Cai, Y., Sun, M., Li, P. (2022). S2-MLP: Spatial-Shift MLP Architecture for Vision. 2022 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV). https://doi.org/10.1109/wacv51458.2022.00367

- The CIFAR-10 dataset. Available at: https://www.cs.toronto.edu/~kriz/cifar.html

- Sample images and annotations (in Russian). Available at: https://docs.ultralytics.com/ru/datasets/classify/cifar10/#sample-images-and-annotations

- Brownlee, J. (2019). Better Deep Learning. Available at: https://machinelearningmastery.com/better-deep-learning/

- Code examples. Computer vision. Keras. Available at: https://keras.io/examples/vision/

- Brownlee, J. (2021). Weight Initialization for Deep Learning Neural Networks. Available at: https://machinelearningmastery.com/weight-initialization-for-deep-learning-neural-networks/

- Colab. Available at: https://colab.research.google.com/notebooks/welcome.ipynb

License

Copyright (c) 2024 Oleg Galchonkov, Oleksii Baranov, Petr Chervonenko, Oksana Babilunga

This work is licensed under a Creative Commons Attribution 4.0 International License.

Copyright and the conditions for its transfer (identification of authorship) are set out in the License Agreement. In particular, the authors retain authorship of their manuscript and grant the journal the right of first publication of this work under the terms of the Creative Commons CC BY license. At the same time, they may independently enter into additional agreements for the non-exclusive distribution of the work in the form in which it was published by this journal, provided that a link to the first publication of the article in this journal is preserved.

A license agreement is a document in which the author warrants that he/she holds all copyright in the work (manuscript, article, etc.).

By signing the License Agreement with TECHNOLOGY CENTER PC, the authors retain all rights to further use of their work, provided that they link to the edition of the journal in which the work was published.

Under the terms of the License Agreement, the Publisher TECHNOLOGY CENTER PC does not take away your copyright; it receives permission from the authors to use and disseminate the publication through the world's scientific resources (its own electronic resources, scientometric databases, repositories, libraries, etc.).

If there is no signed License Agreement, or if the agreement lacks identifiers that establish the author's identity, the editors have no right to work with the manuscript.

It is important to remember that there is another type of agreement between authors and publishers – when copyright is transferred from the authors to the publisher. In this case, the authors lose ownership of their work and may not use it in any way.