Development of a model for determining the FPGA computing resource required to place a multilayer neural network on it

DOI:

https://doi.org/10.15587/1729-4061.2023.281731

Keywords:

FPGA, MLP, LSTM, CNN, SNN, GAN

Abstract

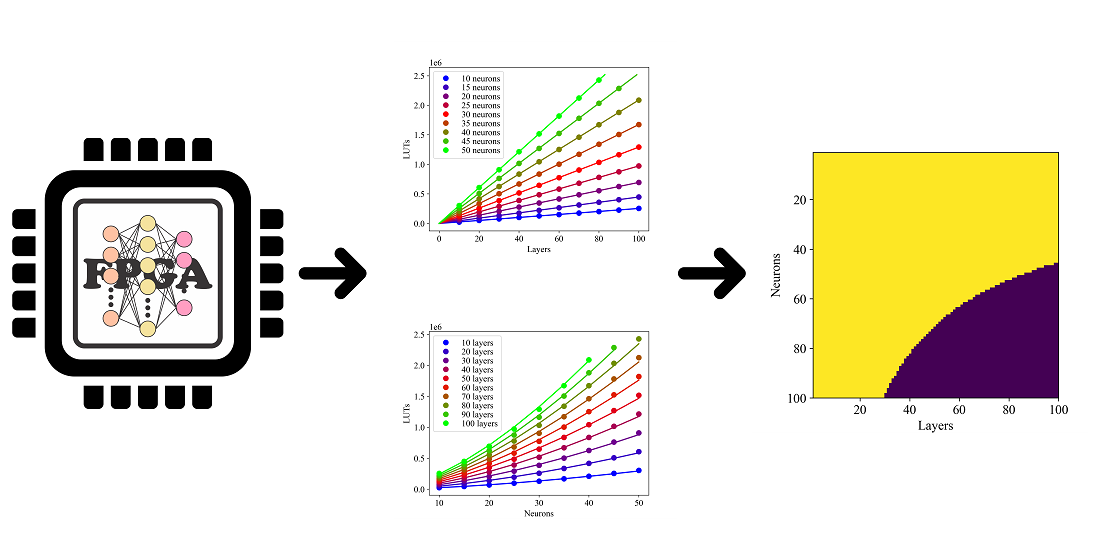

The object of this study is the implementation of artificial neural networks (ANNs) on FPGAs. The problem addressed is the construction of a mathematical model for determining whether the computing resources of an FPGA meet the requirements of a neural network, depending on the network's type, structure, and size. The computing resource considered is the number of LUTs on the FPGA (a lookup table is the basic FPGA structure that performs logic operations).

The model was sought through experimental measurements of the number of LUTs required to implement the following ANN types on an FPGA:

– MLP (multilayer perceptron);

– LSTM (long short-term memory);

– CNN (convolutional neural network);

– SNN (spiking neural network);

– GAN (generative adversarial network).

The experiments were carried out on an FPGA of model HAPS-80 S52. For each of the ANN types listed above, the required number of LUTs was measured as a function of the number of layers and the number of neurons in each layer. As a result, specific types of functions were determined that describe how the required number of LUTs depends on the network type, the number of layers, and the number of neurons, for the ANN types most commonly used in practice.
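The measurement-and-fit procedure described above can be sketched as follows. This is only an illustration under stated assumptions, not the model obtained in the paper: the candidate functional form (LUT count linear in the number of connections of a fully connected network), the helper names `connection_count` and `fit_linear`, and all numbers are hypothetical, and the data points are synthetic placeholders generated from a made-up line rather than measurements.

```python
from typing import Sequence, Tuple


def connection_count(layer_sizes: Sequence[int]) -> int:
    """Number of weights between consecutive layers of a fully connected network."""
    return sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))


def fit_linear(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    if n < 2 or n != len(ys):
        raise ValueError("need at least two (x, y) pairs of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all x values are identical; slope is undefined")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical network structures (neurons per layer).
structures = [[4, 8, 2], [8, 16, 4], [16, 32, 8], [32, 64, 16]]
xs = [connection_count(s) for s in structures]

# Synthetic placeholder "LUT counts", NOT data from the paper:
# generated from an arbitrary line so the fit can be checked.
ys = [50.0 * x + 1000.0 for x in xs]

slope, intercept = fit_linear(xs, ys)
print(f"LUTs ~= {slope:.2f} * connections + {intercept:.2f}")
```

With real measurements, `ys` would hold the LUT counts reported by the synthesis tool for each structure, and other candidate forms could be compared by their residuals.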

A distinctive feature of the results is that the analytical form of the functions describing the number of FPGA LUTs required to implement various ANNs was determined with fairly high accuracy. According to the calculations, a GAN uses 17 times fewer LUTs than a CNN, while an SNN and an MLP use 80 and 14 times fewer LUTs, respectively, than an LSTM. The results can be used in practice when selecting an FPGA on which to implement an ANN of a given type and structure.
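As a rough illustration of this practical use, the sketch below checks which devices in a catalogue have enough LUTs for a given network. Everything here is an assumption made for the example: the function `select_fpgas`, the placeholder estimator `example_lut_model` with its coefficients, the device names and LUT counts, and the `max_utilization` margin are hypothetical and do not come from the paper.

```python
from typing import Callable, Dict, List, Sequence


def select_fpgas(
    layer_sizes: Sequence[int],
    lut_model: Callable[[Sequence[int]], float],
    devices: Dict[str, int],
    max_utilization: float = 0.8,
) -> List[str]:
    """Return the names of devices whose usable LUT budget covers the estimate.

    max_utilization is a hypothetical safety margin: only that fraction of a
    device's LUTs is treated as available to the network.
    """
    if not 0.0 < max_utilization <= 1.0:
        raise ValueError("max_utilization must be in (0, 1]")
    required = lut_model(layer_sizes)
    return [
        name
        for name, available in devices.items()
        if required <= available * max_utilization
    ]


def example_lut_model(layer_sizes: Sequence[int]) -> float:
    """Placeholder estimator (made-up coefficients, not the paper's model)."""
    connections = sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))
    return 50.0 * connections + 1000.0


# Hypothetical device catalogue: name -> number of LUTs.
catalogue = {"device_small": 20_000, "device_medium": 100_000, "device_large": 500_000}

# [16, 32, 8] has 768 connections, so the placeholder estimate is 39,400 LUTs.
suitable = select_fpgas([16, 32, 8], example_lut_model, catalogue)
print(suitable)
```

In practice `lut_model` would be replaced by the fitted function for the chosen ANN type, and the catalogue by the LUT counts from the vendors' datasheets.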


License

Copyright (c) 2023 Bekbolat Medetov, Tansaule Serikov, Arai Tolegenova, Dauren Zhexebay, Asset Yskak, Timur Namazbayev, Nurtay Albanbay

This work is licensed under a Creative Commons Attribution 4.0 International License.
