Development of object detection from point clouds of a 3D dataset by Point-Pillars neural network

DOI:

https://doi.org/10.15587/1729-4061.2023.275155

Keywords:

object detection, point clouds, point-pillars, deep learning convolutional neural network

Abstract

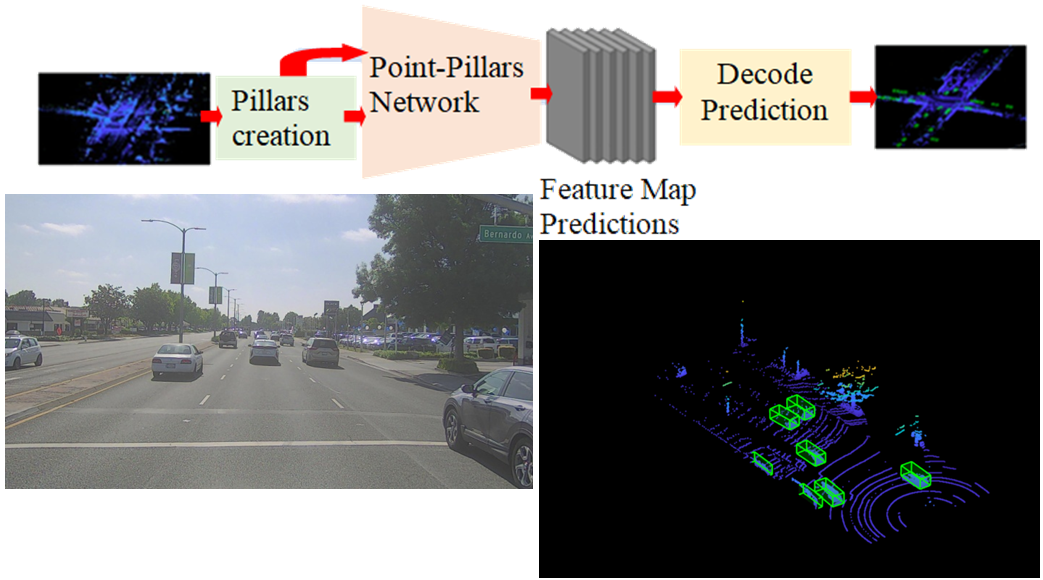

Deep learning algorithms can automatically process point clouds across a broad range of 3D imaging applications, including advanced driver assistance systems, perception and robot navigation, scene classification, surveillance, stereo vision, and depth estimation. According to prior studies, detecting objects from point clouds of a 3D dataset with acceptable accuracy remains a challenging task. This study aims to detect objects from point clouds of a 3D dataset using the Point-Pillars neural network architecture, which makes it possible to detect 3D objects by means of 2D convolutional neural network (CNN) layers. The Point-Pillars architecture includes a learnable encoder that uses Point-Nets to learn a representation of point clouds organized in vertical columns (pillars). It then runs a 2D CNN to produce network predictions, decodes the predictions, and generates 3D bounding boxes for object classes such as pedestrians, trucks, and cars. The method comprises three stages: a feature encoder produces a sparse pseudo-image from the point cloud, a 2D convolutional backbone processes the pseudo-image into a high-level representation, and detection heads detect and regress 3D bounding boxes. Ground truth data augmentation and additional global data augmentation methods are used to add diversity to the training data and the corresponding boxes. The results show that the average orientation similarity (AOS) and average precision (AP) were 0.60989 and 0.61157 for trucks, and 0.74377 and 0.75569 for cars, respectively.
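The first stage described above, turning a point cloud into a pseudo-image of pillars, can be illustrated with a minimal NumPy sketch. This is not the authors' implementation. The function name `pillarize`, the grid ranges, and the pillar size are hypothetical, and the learned Point-Net encoder is replaced here by two hand-made per-pillar features (point count and maximum height), purely to show how points are binned into vertical columns and scattered onto a 2D grid that a 2D CNN could consume.

```python
import numpy as np


def pillarize(points, x_range=(0.0, 4.0), y_range=(0.0, 4.0), pillar_size=1.0):
    """Bin (N, 3) points into vertical pillars and return a (2, ny, nx) pseudo-image.

    Channel 0 holds the number of points per pillar, channel 1 the maximum
    height (z) per pillar. Both are illustrative stand-ins (an assumption)
    for the features a learned Point-Net encoder would produce.
    """
    nx = int(round((x_range[1] - x_range[0]) / pillar_size))
    ny = int(round((y_range[1] - y_range[0]) / pillar_size))

    # Discard points that fall outside the x-y grid.
    inside = (
        (points[:, 0] >= x_range[0]) & (points[:, 0] < x_range[1])
        & (points[:, 1] >= y_range[0]) & (points[:, 1] < y_range[1])
    )
    pts = points[inside]

    # Integer pillar index of every remaining point.
    ix = ((pts[:, 0] - x_range[0]) / pillar_size).astype(int)
    iy = ((pts[:, 1] - y_range[0]) / pillar_size).astype(int)

    # Accumulate per-pillar statistics; np.*.at handles repeated indices.
    count = np.zeros((ny, nx))
    np.add.at(count, (iy, ix), 1.0)
    max_z = np.full((ny, nx), -np.inf)
    np.maximum.at(max_z, (iy, ix), pts[:, 2])
    max_z[count == 0] = 0.0  # empty pillars stay zero, so the image is sparse

    return np.stack([count, max_z])


# Four made-up points; the last one lies outside the grid and is dropped.
points = np.array([
    [0.5, 0.5, 1.0],
    [0.2, 0.8, 2.0],
    [3.5, 1.5, 0.3],
    [9.0, 9.0, 5.0],
])
pseudo_image = pillarize(points)
print(pseudo_image.shape)
```

Most cells of the resulting grid are empty, which is why the abstract calls the pseudo-image sparse. In the architecture described above, the 2D convolutional backbone and the detection heads would then operate on such a grid.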

Supporting Agency

- The authors would like to express their deepest gratitude to the Northern Technical University, Mosul, Iraq, for its support in completing this research.



Copyright (c) 2023 Omar I. Dallal Bashi, Husamuldeen K. Hameed, Yasir Mahmood Al Kubaiaisi, Ahmad H. Sabry

This work is licensed under a Creative Commons Attribution 4.0 International License.
