Development of a model for determining the necessary FPGA computing resource for placing a multilayer neural network on it

DOI: https://doi.org/10.15587/1729-4061.2023.281731

Keywords: FPGA, MLP, LSTM, CNN, SNN, GAN

Abstract

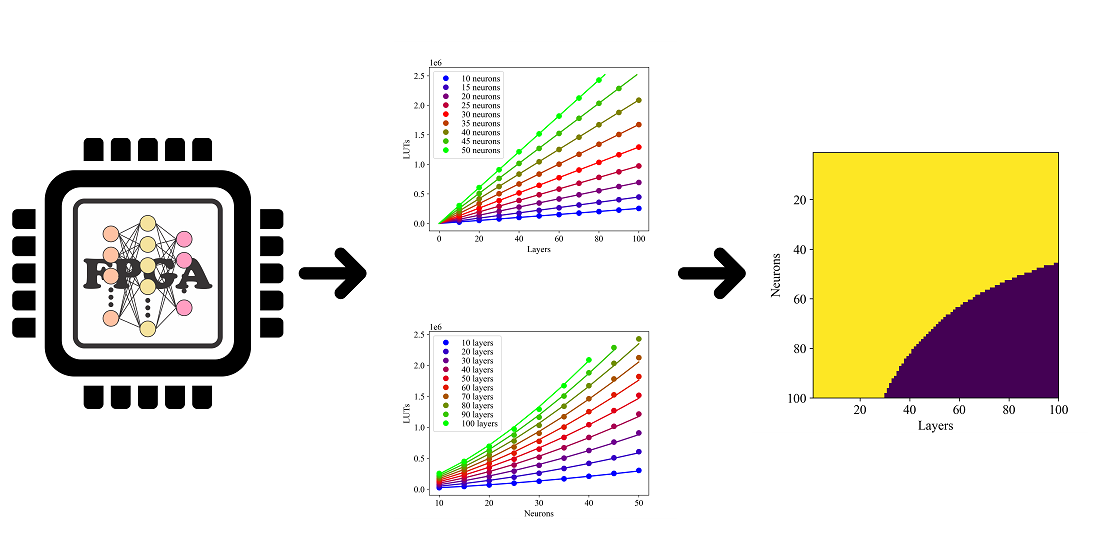

This paper studies the implementation of artificial neural networks (ANNs) on FPGAs. The problem addressed is the construction of a mathematical model for determining whether the computing resources of an FPGA meet the requirements of a neural network, depending on the network's type, structure, and size. The computing resource considered is the number of LUTs (look-up tables, the basic FPGA structure that performs logical operations).

The model was sought through experimental measurements of the number of LUTs required to implement the following types of ANNs on an FPGA:

– MLP (Multilayer Perceptron);

– LSTM (Long Short-Term Memory);

– CNN (Convolutional Neural Network);

– SNN (Spiking Neural Network);

– GAN (Generative Adversarial Network).

Experimental studies were carried out on the FPGA model HAPS-80 S52, measuring the required number of LUTs as a function of the number of layers and the number of neurons in each layer for the above types of ANNs. As a result, specific functional forms were determined that express the required number of LUTs in terms of the network type, the number of layers, and the number of neurons for the types of ANNs most commonly used in practice.
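To illustrate how a functional dependence of LUT count on network structure might be recovered from measurements, the following is a minimal sketch only. The abstract does not state the functional forms the authors obtained, so the linear model in the number of connections, the helper names `connection_count` and `fit_linear`, and all numeric values are assumptions made for illustration; the "measurements" are synthetic placeholders, not data from the paper.

```python
from statistics import mean


def connection_count(layer_sizes):
    """Number of weights in a fully connected network with these layer sizes."""
    return sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))


def fit_linear(xs, ys):
    """Ordinary least squares for the assumed model y = intercept + slope * x."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return y_bar - slope * x_bar, slope


# Hypothetical network structures (neurons per layer).
structures = [[4, 8, 2], [8, 16, 4], [16, 32, 8], [16, 32, 32, 8]]
xs = [connection_count(s) for s in structures]

# Synthetic placeholder "measurements" generated from made-up coefficients.
# These are NOT values reported in the paper.
ys = [500 + 12 * x for x in xs]

intercept, slope = fit_linear(xs, ys)


def predict_luts(layer_sizes):
    """Estimate the LUT count for a new structure using the fitted line."""
    return intercept + slope * connection_count(layer_sizes)
```

With real synthesis reports in place of the synthetic values, the same procedure would be repeated per network type, and a different model form could be substituted if the residuals show that a line is a poor fit.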

A distinctive feature of the results is that the analytical form of the functions describing the number of FPGA LUTs required to implement various ANNs was determined with sufficiently high accuracy. According to the calculations, a GAN uses 17 times fewer LUTs than a CNN, while an SNN and an MLP use 80 and 14 times fewer LUTs, respectively, than an LSTM. The results can be used in practice when an FPGA must be chosen for implementing an ANN of a given type and structure.
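The selection step described here can be sketched as a simple budget check. This is a hedged illustration, not the authors' procedure: the function names, the `utilization_limit` headroom parameter, and the LSTM baseline figure are hypothetical. Only the ratios 80 and 14 come from the abstract, and the abstract does not say for which network sizes they hold.

```python
# Ratios quoted in the abstract: an SNN and an MLP use 80 and 14 times
# fewer LUTs than an LSTM. The network sizes they apply to are not stated.
LUT_RATIO_VS_LSTM = {"SNN": 80, "MLP": 14}


def implied_luts(lstm_luts):
    """LUT counts implied by the quoted ratios for a given LSTM LUT count."""
    return {name: lstm_luts / ratio for name, ratio in LUT_RATIO_VS_LSTM.items()}


def fits_on_fpga(required_luts, available_luts, utilization_limit=0.8):
    """Check whether a design fits within a fraction of the device's LUTs.

    utilization_limit is an assumed headroom factor, not a value from the paper.
    """
    return required_luts <= available_luts * utilization_limit


# Hypothetical baseline: an LSTM design that needs 800,000 LUTs.
implied = implied_luts(800_000)

# Hypothetical device with 50,000 LUTs available.
snn_fits = fits_on_fpga(implied["SNN"], 50_000)
mlp_fits = fits_on_fpga(implied["MLP"], 50_000)
```

In practice `required_luts` would come from the fitted model for the chosen network type, and `available_luts` from the datasheet of the candidate device.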


License

Copyright (c) 2023 Bekbolat Medetov, Tansaule Serikov, Arai Tolegenova, Dauren Zhexebay, Asset Yskak, Timur Namazbayev, Nurtay Albanbay

This work is licensed under a Creative Commons Attribution 4.0 International License.
