Development of a hybrid CNN-RNN model for enhanced recognition of dynamic gestures in Kazakh Sign Language

DOI:

https://doi.org/10.15587/1729-4061.2025.315834

Keywords:

dynamic gesture recognition, hybrid neural network, Kazakh Sign Language, facial expressions

Abstract

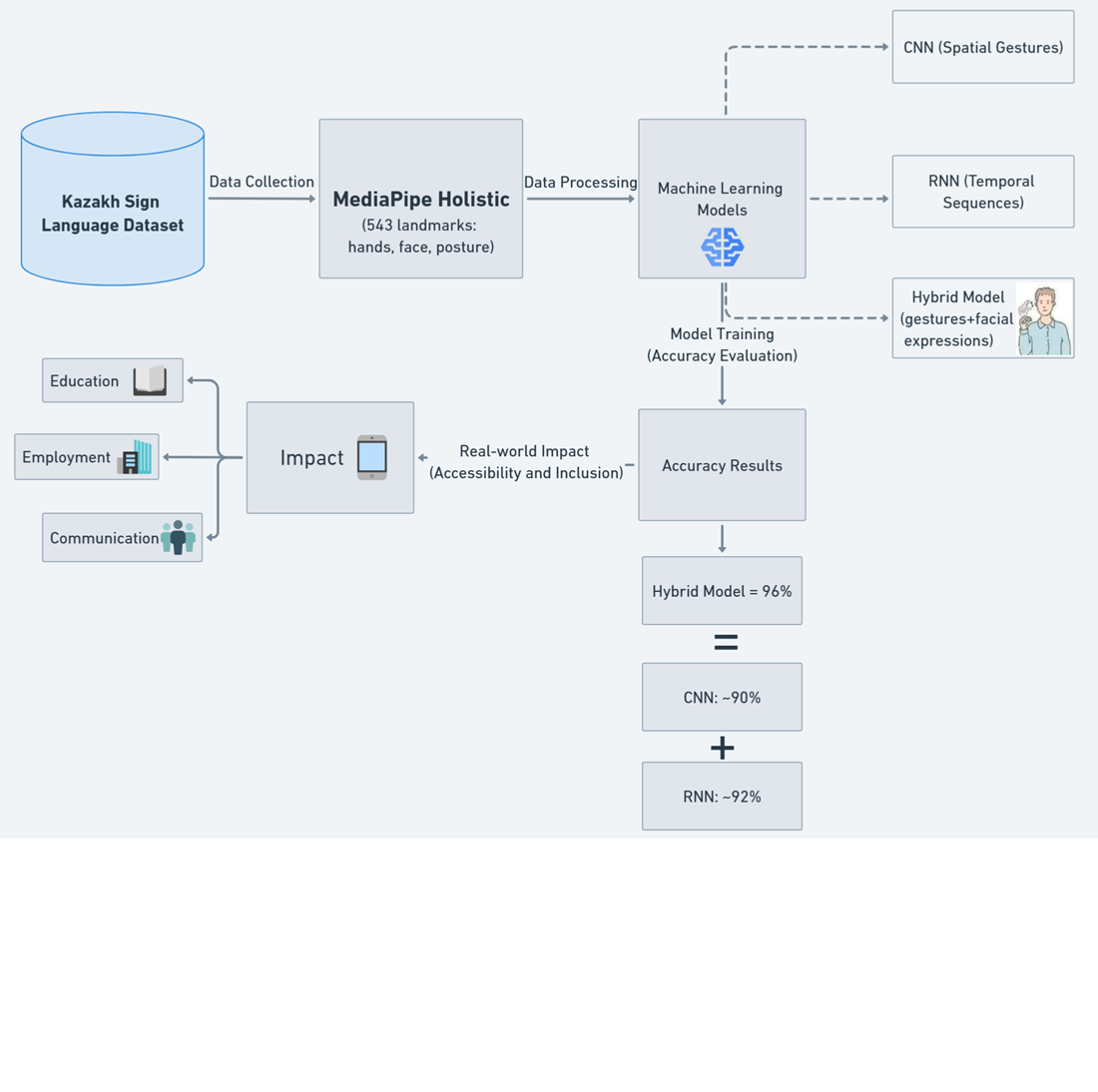

Around 1 % of the population of the Republic of Kazakhstan is affected by hearing disabilities, which makes Kazakh Sign Language (KSL) an important means of communication among its citizens. The limited availability of tools for KSL creates significant challenges for people with hearing impairments in education, employment, and daily interactions. This research addresses these challenges by developing an automated recognition system for KSL gestures, with the aim of making communication more accessible and inclusive through artificial intelligence. The approach combines Convolutional Neural Networks (CNNs), which recognize spatial gesture patterns, with Recurrent Neural Networks (RNNs), which process temporal sequences. By combining these methods, the system recognizes both hand gestures and facial expressions, providing a dual-stream model that surpasses single-stream gesture recognition systems focused solely on hand movements. A dedicated dataset was created using MediaPipe Holistic, an open-source tool that identifies 543 landmarks across the hands, face, and body pose, capturing the multifaceted nature of sign language. The findings showed that the hybrid model significantly outperformed standalone CNN and RNN models, achieving up to 96 % accuracy. This demonstrates that integrating facial expressions with hand gestures greatly improves the precision of sign language recognition. By facilitating communication for hearing-impaired individuals, the system has the potential to improve inclusivity and accessibility in various settings across the Republic of Kazakhstan, and it paves the way for further research and application in other sign languages.
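The figure of 543 landmarks follows from the parts that MediaPipe Holistic tracks: 33 pose points, 468 face points, and 21 points per hand. As a minimal sketch of how one video frame could be turned into a fixed-length feature vector, the example below flattens those parts and zero-fills any part that was not detected. The function names, the use of three coordinates per landmark, and the zero-fill convention are illustrative assumptions, not details taken from the paper.

```python
from typing import List, Optional, Sequence

# Landmark counts for MediaPipe Holistic: 33 pose + 468 face + 21 per hand.
POSE_POINTS, FACE_POINTS, HAND_POINTS = 33, 468, 21
TOTAL_LANDMARKS = POSE_POINTS + FACE_POINTS + 2 * HAND_POINTS  # 543

Point = Sequence[float]  # one (x, y, z) landmark


def flatten_part(points: Optional[Sequence[Point]], expected: int) -> List[float]:
    """Flatten one body part to expected * 3 floats.

    Assumption: a part that was not detected in the frame is passed as None
    and is replaced by zeros so every frame has the same length.
    """
    if points is None:
        return [0.0] * (expected * 3)
    if len(points) != expected:
        raise ValueError(f"expected {expected} landmarks, got {len(points)}")
    return [float(c) for p in points for c in p[:3]]


def frame_features(
    pose: Optional[Sequence[Point]] = None,
    face: Optional[Sequence[Point]] = None,
    left_hand: Optional[Sequence[Point]] = None,
    right_hand: Optional[Sequence[Point]] = None,
) -> List[float]:
    """Concatenate pose, face, and both hands into one per-frame vector."""
    return (
        flatten_part(pose, POSE_POINTS)
        + flatten_part(face, FACE_POINTS)
        + flatten_part(left_hand, HAND_POINTS)
        + flatten_part(right_hand, HAND_POINTS)
    )


# Hypothetical frame in which only the left hand was detected.
example = frame_features(left_hand=[(0.1, 0.2, 0.3)] * HAND_POINTS)
```

Under these assumptions, a recorded gesture becomes a sequence of such vectors, one per frame, which is the kind of input a temporal model such as an RNN consumes.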

License

Copyright (c) 2025 Aigerim Aitim, Dariga Sattarkhuzhayeva, Aisulu Khairullayeva

This work is licensed under a Creative Commons Attribution 4.0 International License.

The assignment of copyright and the conditions for its transfer (identification of authorship) are set out in the License Agreement. In particular, the authors retain authorship of their manuscript and grant the journal the right of first publication of this work under the terms of the Creative Commons CC BY license. They may also enter into separate additional agreements for the non-exclusive distribution of the work in the form in which it was published by this journal, provided that a link to the first publication of the article in this journal is preserved.

A license agreement is a document in which the author warrants that he/she owns all copyright for the work (manuscript, article, etc.).

By signing the License Agreement with TECHNOLOGY CENTER PC, the authors retain all rights to the further use of their work, provided that they link to our edition in which the work was published.

According to the terms of the License Agreement, the Publisher TECHNOLOGY CENTER PC does not take away your copyrights and receives permission from the authors to use and disseminate the publication through the world's scientific resources (own electronic resources, scientometric databases, repositories, libraries, etc.).

In the absence of a signed License Agreement, or if the agreement lacks identifiers that make it possible to establish the author's identity, the editors have no right to work with the manuscript.

It is important to remember that there is another type of agreement between authors and publishers – when copyright is transferred from the authors to the publisher. In this case, the authors lose ownership of their work and may not use it in any way.