Devising a method to form reference images to provide high-precision navigation for unmanned aerial vehicles when changing geometric viewing conditions

DOI: https://doi.org/10.15587/1729-4061.2025.330905

Keywords: reference images, navigation parameters, discrete in viewing angles and altitudes, information features, decision function

Abstract

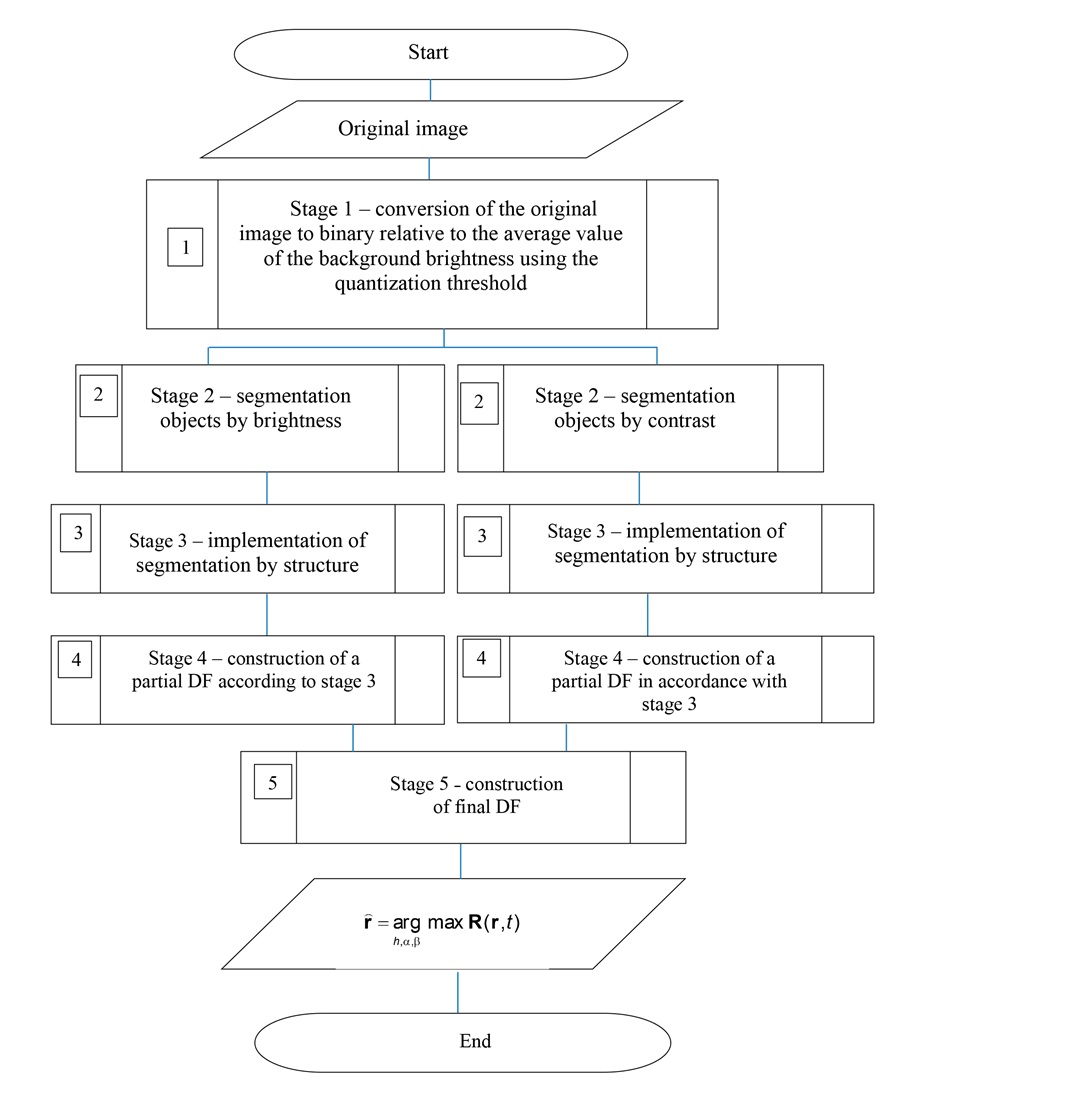

The object of this study is the process of forming a minimally sufficient set of reference images for correlation-extreme navigation systems when the navigation parameters of unmanned aerial vehicles change. The paper reports results on forming a set of reference images that accounts for changes in the navigation parameters of high-speed unmanned aerial vehicles and for the impact of these changes on the information features of images. The effect of changes in viewing angle and altitude on the formation of images segmented by energy characteristics has been studied. Discrete steps in the navigation parameters were established at which the correlation between image fragments remains at 0.9: from 90 to 120 meters in altitude and from 15° to 25° in viewing angle. The effect of the structure of segmented images on the selection of the reference object has also been studied. It is shown that the distinguishing feature for selecting the reference object in a segmented image is a fractal dimension of 2.998 to 2.999.
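
The abstract does not specify the correlation measure or how a discrete step is chosen. As a minimal sketch, assuming zero-mean normalized cross-correlation (NCC) as the measure and fragments already rendered for each candidate step (altitude in meters or viewing angle in degrees), the largest admissible step is the last one whose fragment still correlates with the reference at 0.9 or above. The functions `ncc` and `largest_admissible_step` are hypothetical illustrations, not the authors' MATLAB code, and the scan assumes that correlation falls off as the step grows.

```python
import numpy as np

def ncc(a, b):
    """Zero-mean normalized cross-correlation of two equal-size fragments
    (assumed correlation measure; the paper does not name one)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    if a.shape != b.shape:
        raise ValueError("fragments must have the same shape")
    a0 = a - a.mean()
    b0 = b - b.mean()
    denom = np.sqrt((a0 * a0).sum() * (b0 * b0).sum())
    if denom == 0.0:
        return 0.0  # a flat fragment carries no correlation information
    return float((a0 * b0).sum() / denom)

def largest_admissible_step(reference, fragments_by_step, threshold=0.9):
    """Hypothetical helper: scan steps in increasing order and return the
    last step whose fragment keeps NCC >= threshold with the reference.
    The scan stops at the first violation, assuming correlation decreases
    as the step grows. Returns None if no step qualifies."""
    best = None
    for step in sorted(fragments_by_step):
        if ncc(reference, fragments_by_step[step]) >= threshold:
            best = step
        else:
            break
    return best
```

Stopping at the first violation keeps the chosen step conservative when correlation is not strictly monotonic in the step size.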

The study was conducted in the MATLAB software environment using a source image taken from Google Earth Pro. Applying the chosen sequence for constructing reference-image fragments made it possible to identify the objects with the best signal-to-noise ratio and structural characteristics as the discrete step of the navigation parameters increases. The method differs from known ones in that it uses image structure as an information feature alongside object brightness and contrast. This would reduce the number of reference-image fragments while maintaining accuracy. The results could be implemented in the secondary processing systems of correlation-extreme navigation systems used on high-speed unmanned aerial vehicles.
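
To use image structure as an information feature next to brightness and contrast, the structure of a fragment can be summarized by its fractal dimension. The sketch below assumes the differential box-counting (DBC) estimator of Sarkar and Chaudhuri, which treats a grayscale fragment as a surface and typically yields values between 2 (smooth) and 3 (highly irregular); the abstract does not name the estimator used. The names `dbc_fractal_dimension` and `object_features`, and the choice of mean brightness and RMS contrast as the other two features, are hypothetical.

```python
import numpy as np

def dbc_fractal_dimension(img, gray_levels=256):
    """Differential box-counting estimate of the fractal dimension of a
    grayscale fragment (assumed estimator, not the paper's stated one)."""
    img = np.asarray(img, dtype=float)
    m = min(img.shape)
    img = img[:m, :m]  # DBC is defined on a square M x M region
    # Box sizes s that tile the region exactly, 2 <= s <= M/2.
    sizes = [s for s in range(2, m // 2 + 1) if m % s == 0]
    if len(sizes) < 2:
        raise ValueError("fragment too small for box counting")
    log_inv_r, log_n = [], []
    for s in sizes:
        h = s * gray_levels / m  # box height in gray levels for grid size s
        # Split into (M/s) x (M/s) blocks of s x s pixels.
        blocks = img.reshape(m // s, s, m // s, s)
        bmax = blocks.max(axis=(1, 3))
        bmin = blocks.min(axis=(1, 3))
        # Number of boxes of height h spanning each block's gray-level range.
        n_r = (np.floor(bmax / h) - np.floor(bmin / h) + 1).sum()
        log_inv_r.append(np.log(m / s))
        log_n.append(np.log(n_r))
    # D is the slope of log N_r against log(1/r), with r = s/M.
    slope, _intercept = np.polyfit(log_inv_r, log_n, 1)
    return float(slope)

def object_features(fragment):
    """Hypothetical feature vector: brightness, RMS contrast, structure."""
    fragment = np.asarray(fragment, dtype=float)
    return {
        "brightness": float(fragment.mean()),
        "contrast": float(fragment.std()),
        "fractal_dim": dbc_fractal_dimension(fragment),
    }
```

A perfectly flat fragment gives a dimension of 2, while a uniformly noisy 64×64 fragment gives a value much closer to 3, so the estimate separates smooth and highly textured candidates.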

License

Copyright (c) 2025 Alexander Sotnikov, Ruslan Sydorenko, Serhii Mykus, Serhii Zakirov, Ihor Vlasov, Oleksandr Shkvarskyi, Yuriy Samsonov, Andrii Petik, Andrii Nechaus, Oleh Rikunov

This work is licensed under a Creative Commons Attribution 4.0 International License.

Copyright consolidation and the conditions for transferring copyright (identification of authorship) are set out in the License Agreement. In particular, the authors retain authorship of their manuscript and grant the journal the right of first publication of this work under the terms of the Creative Commons CC BY license. The authors may also enter into separate additional agreements for the non-exclusive distribution of the work in the form in which it was published by this journal, provided that a link to its first publication in this journal is preserved.

A license agreement is a document in which the author warrants that he/she owns all copyright in the work (manuscript, article, etc.).

Authors who sign the License Agreement with TECHNOLOGY CENTER PC retain all rights to further use of their work, provided that they link to the edition of our journal in which the work was published.

Under the terms of the License Agreement, the Publisher TECHNOLOGY CENTER PC does not take away your copyright; it receives the authors' permission to use and disseminate the publication through the world's scientific resources (its own electronic resources, scientometric databases, repositories, libraries, etc.).

Without a signed License Agreement, or if the agreement lacks identifiers that establish the author's identity, the editors have no right to work with the manuscript.

Note that there is another type of agreement between authors and publishers, in which copyright is transferred from the authors to the publisher. In that case, the authors lose ownership of their work and may not use it in any way.