Recognition of aerial photography objects based on data sets with different aggregation of classes

DOI:

https://doi.org/10.15587/1729-4061.2023.272951

Keywords:

recognition of aerial photography objects, classification of data sets, recognition accuracy, neural network of the ConvNets group

Abstract

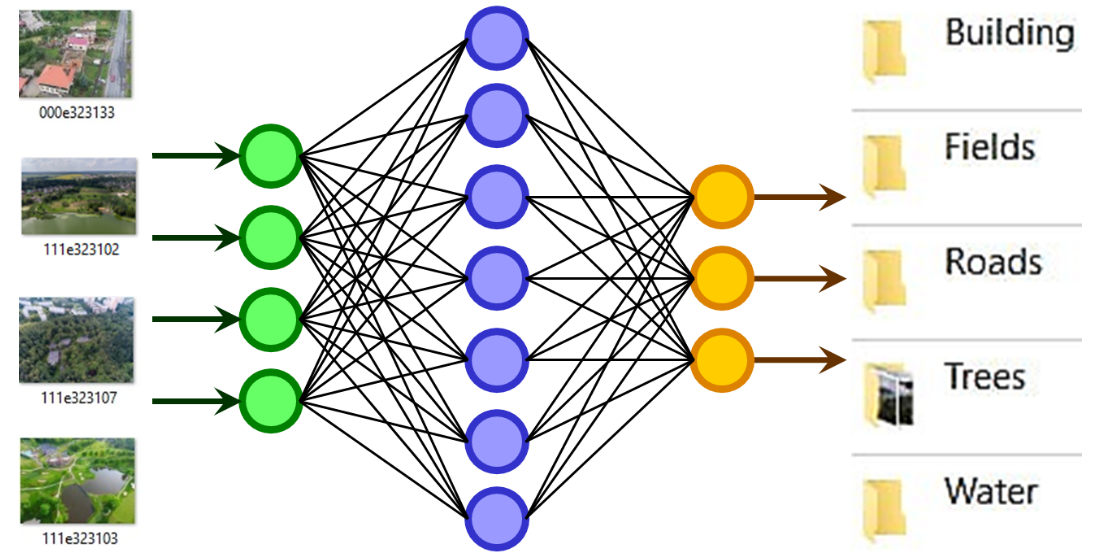

The object of this work is algorithms for recognizing objects in aerial photography, specifically the analysis of recognition accuracy on data sets with different class aggregation.

To solve this problem, an information system for object recognition based on aerial photography data has been developed. The system is built on an architecture derived from neural networks of the ConvNets group, with structural modifications. Using a convolutional neural network of the ConvNets group in the information system yields high accuracy both when training the system and when validating its results. However, the authors found no studies on the training of neural networks of the ConvNets group. Therefore, an analysis was carried out to determine in which case a ConvNets network gives higher validation accuracy: when it is trained on datasets with class aggregation or without it.

The authors analyzed the accuracy of recognizing aerial photography objects on data sets with different class aggregation. The dataset used for neural network training consisted of labeled 3-channel images of 64x64 pixels. Based on the analysis, the optimal number of training epochs was selected, which makes it possible to recognize aerial photography objects with greater accuracy and speed. It was concluded that greater accuracy in image classification is achieved for samples in which data from different classes do not overlap (without class aggregation). The result of the work is recommended for use in automating dataset filling and in the information filtering of visual images.
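The two ideas in this paragraph, class aggregation and the choice of the number of training epochs, can be illustrated with a minimal sketch. It is not the authors' implementation: the class names, the aggregation map, and the helper functions `aggregate_labels` and `best_epoch` are hypothetical, and picking the epoch with the highest validation accuracy is only one plausible selection criterion, assumed here for illustration.

```python
from typing import Dict, List, Sequence

# Hypothetical fine-grained labels and a hypothetical aggregation map;
# the abstract does not list the actual classes used in the study.
AGGREGATION: Dict[str, str] = {
    "car": "vehicle",
    "truck": "vehicle",
    "house": "building",
    "hangar": "building",
}


def aggregate_labels(labels: Sequence[str], mapping: Dict[str, str]) -> List[str]:
    """Replace each fine-grained label with its aggregated class.

    Labels that are missing from the mapping are kept unchanged.
    """
    return [mapping.get(label, label) for label in labels]


def best_epoch(val_accuracy: Sequence[float]) -> int:
    """Return the 1-based epoch with the highest validation accuracy.

    On ties the earliest epoch wins, which favours shorter training.
    This criterion is an assumption, not the one stated by the authors.
    """
    if not val_accuracy:
        raise ValueError("val_accuracy must not be empty")
    best_index = max(
        range(len(val_accuracy)),
        key=lambda i: (val_accuracy[i], -i),
    )
    return best_index + 1
```

Training the same network once on the original labels and once on the output of `aggregate_labels`, then comparing the validation accuracy at the epoch returned by `best_epoch`, would mirror the kind of comparison the abstract describes.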

License

Copyright (c) 2023 Pylyp Prystavka, Kseniia Dukhnovska, Oksana Kovtun, Olga Leshchenko, Olha Cholyshkina, Vadym Semenov

This work is licensed under a Creative Commons Attribution 4.0 International License.

The assignment of copyright and the conditions for its transfer (identification of authorship) are set out in the License Agreement. In particular, the authors retain authorship of their manuscript and grant the journal the right of first publication of this work under the terms of the Creative Commons CC BY license. They may also enter into separate additional agreements for the non-exclusive distribution of the work in the form in which it was published by this journal, provided that a link to the first publication of the article in this journal is preserved.

A license agreement is a document in which the author warrants that he/she owns all copyright for the work (manuscript, article, etc.).

The authors, signing the License Agreement with TECHNOLOGY CENTER PC, have all rights to the further use of their work, provided that they link to our edition in which the work was published.

According to the terms of the License Agreement, the Publisher TECHNOLOGY CENTER PC does not take away your copyrights and receives permission from the authors to use and disseminate the publication through the world's scientific resources (own electronic resources, scientometric databases, repositories, libraries, etc.).

In the absence of a signed License Agreement, or if the agreement lacks identifiers that make it possible to establish the author's identity, the editors have no right to work with the manuscript.

It is important to remember that there is another type of agreement between authors and publishers – when copyright is transferred from the authors to the publisher. In this case, the authors lose ownership of their work and may not use it in any way.