Using the set of informative features of a binding object to construct a decision function by the system of technical vision when localizing mobile robots

DOI: https://doi.org/10.15587/1729-4061.2024.303989

Keywords: mobile robot, decision function, informative features, quantization thresholds, signal-to-noise ratio

Abstract

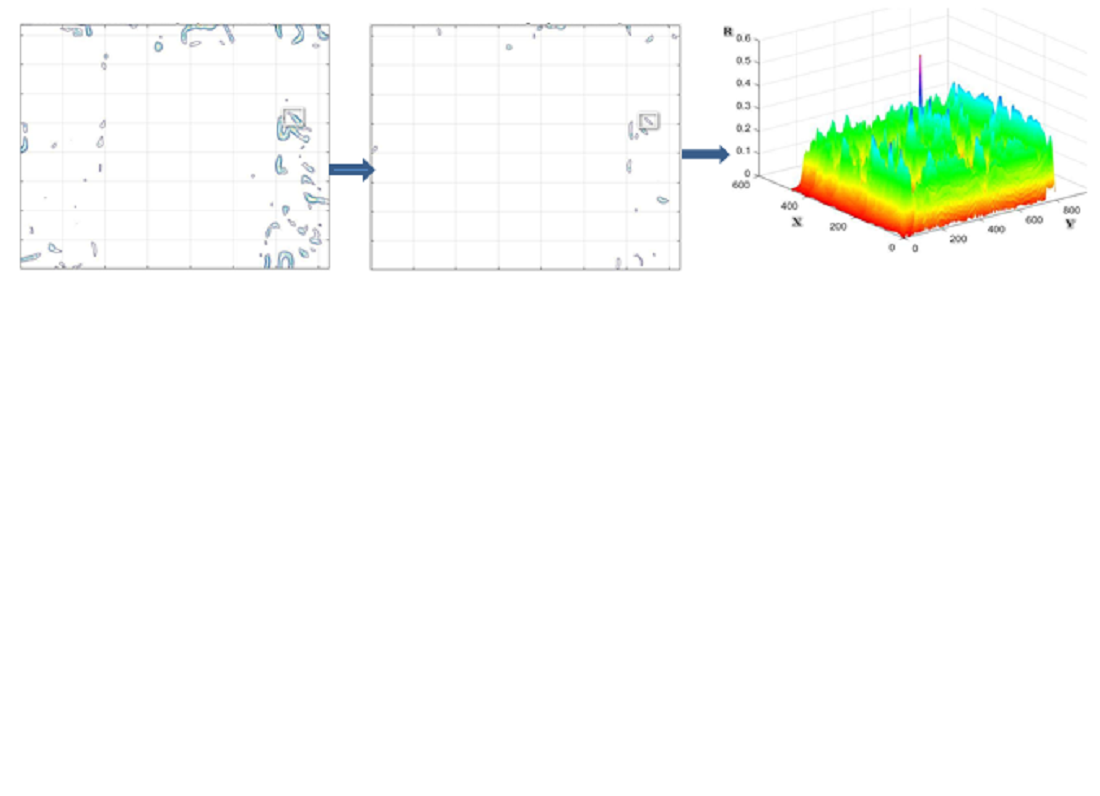

The object of this study is the process by which an optical-electronic technical vision system constructs a decision function when the current image, formed during localization of a mobile robot, is affected by interference. The paper reports the results of solving the problem of constructing a decision function when the signal-to-noise ratio of the current image decreases, by using a set of informative features of the binding object for its selection: brightness, contrast, and area. It is proposed to select the binding object by choosing appropriate quantization thresholds of the current image for the selected informative features, taking the signal-to-noise ratio into account, so as to provide the required probability of object selection. The dependence of the object selection probability on the chosen quantization threshold values was established. The results could enable the construction of a unimodal decision function when localizing mobile robots over surfaces whose objects have weakly pronounced brightness and contrast characteristics, as well as small geometric dimensions. Modeling was used to estimate the probability of forming a decision function as a function of the noise level of the current images. It is shown that the proposed approach selects objects with a probability from 0.78 to 0.99 for the signal-to-noise ratios of images formed by the technical vision system under real conditions. The method for constructing a decision function under interference could be implemented in the information processing algorithms of optical-electronic technical vision systems used for the navigation of unmanned aerial vehicles.
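To make the idea of selecting a binding object by quantization thresholds on brightness, contrast, and area more concrete, here is a minimal Monte Carlo sketch. It is not the authors' algorithm. It rests on our own assumptions: a synthetic n x n current image containing one bright square object, additive white Gaussian noise with sigma = 1 (so the SNR equals the object amplitude), contrast measured as the mean of a thresholded blob minus the background mean, and 4-connected components. All function names, threshold values, and the success criterion (exactly one surviving blob whose centroid lies on the object) are hypothetical.

```python
import random
from collections import deque


def make_image(n, obj_size, amplitude, sigma, rng):
    """Hypothetical current image: zero-mean Gaussian noise plus one bright
    square binding object of side obj_size centred in an n x n frame."""
    img = [[rng.gauss(0.0, sigma) for _ in range(n)] for _ in range(n)]
    start = (n - obj_size) // 2
    for r in range(start, start + obj_size):
        for c in range(start, start + obj_size):
            img[r][c] += amplitude
    return img, (start, start + obj_size)


def components(mask):
    """Return the 4-connected components of a binary mask as lists of (row, col)."""
    n = len(mask)
    seen = [[False] * n for _ in range(n)]
    comps = []
    for r in range(n):
        for c in range(n):
            if not mask[r][c] or seen[r][c]:
                continue
            seen[r][c] = True
            queue, comp = deque([(r, c)]), []
            while queue:
                y, x = queue.popleft()
                comp.append((y, x))
                for yy, xx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if 0 <= yy < n and 0 <= xx < n and mask[yy][xx] and not seen[yy][xx]:
                        seen[yy][xx] = True
                        queue.append((yy, xx))
            comps.append(comp)
    return comps


def select_object(img, t_brightness, t_contrast, t_area):
    """Brightness quantization, then area and contrast thresholds on each blob."""
    n = len(img)
    mask = [[img[r][c] > t_brightness for c in range(n)] for r in range(n)]
    background = [img[r][c] for r in range(n) for c in range(n) if not mask[r][c]]
    bg_mean = sum(background) / len(background) if background else 0.0
    selected = []
    for comp in components(mask):
        if len(comp) < t_area:  # area feature: drop small noise blobs
            continue
        mean = sum(img[y][x] for y, x in comp) / len(comp)
        if mean - bg_mean >= t_contrast:  # contrast feature: blob mean vs background mean
            selected.append(comp)
    return selected


def selection_probability(snr, trials=200, n=32, obj_size=6,
                          t_brightness=3.0, t_contrast=3.0, t_area=4, seed=1):
    """Monte Carlo estimate of P(selection); sigma = 1, so amplitude = SNR."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        img, (lo, hi) = make_image(n, obj_size, snr, 1.0, rng)
        found = select_object(img, t_brightness, t_contrast, t_area)
        # Success: exactly one blob survives and its centroid lies on the object.
        if len(found) == 1:
            cy = sum(y for y, _ in found[0]) / len(found[0])
            cx = sum(x for _, x in found[0]) / len(found[0])
            if lo <= cy < hi and lo <= cx < hi:
                hits += 1
    return hits / trials


if __name__ == "__main__":
    for snr in (1.0, 2.0, 4.0, 8.0):
        print(f"SNR={snr}: P(select) ~ {selection_probability(snr):.2f}")
```

Re-running `selection_probability` with other threshold values (for example, a lower `t_brightness` together with a lower `t_contrast` at low SNR) shows how the choice of quantization thresholds shifts the selection probability. The numbers it produces depend entirely on the assumed image model and are not the probabilities reported in the paper.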

License

Copyright (c) 2024 Alexander Sotnikov, Valeriia Tiurina, Konstantin Petrov, Viktoriia Lukyanova, Oleksiy Lanovyy, Yurii Onishchenko, Yurii Gnusov, Serhii Petrov, Oleksii Boichenko, Pavlo Breus

This work is licensed under a Creative Commons Attribution 4.0 International License.

The securing of copyright (identification of authorship) and the conditions for its transfer are set out in the License Agreement. In particular, the authors retain authorship of their manuscript and grant the journal the right of first publication of the work under the terms of the Creative Commons CC BY license. At the same time, they may independently enter into additional agreements for the non-exclusive distribution of the work in the form in which it was published by this journal, provided that a link to the first publication of the article in this journal is preserved.

A license agreement is a document in which the author warrants that he/she owns all copyright for the work (manuscript, article, etc.).

By signing the License Agreement with TECHNOLOGY CENTER PC, the authors retain all rights to further use of their work, provided that they link to the issue of the journal in which the work was published.

Under the terms of the License Agreement, the Publisher TECHNOLOGY CENTER PC does not take away your copyright and receives permission from the authors to use and disseminate the publication through the world's scientific resources (its own electronic resources, scientometric databases, repositories, libraries, etc.).

In the absence of a signed License Agreement, or if the agreement lacks identifiers that establish the author's identity, the editors have no right to work with the manuscript.

It is important to remember that there is another type of agreement between authors and publishers – when copyright is transferred from the authors to the publisher. In this case, the authors lose ownership of their work and may not use it in any way.