Визначення ефективності GPT-4.1-mini для багатокласової категоризації тексту англійською та українською мовами

DOI:

https://doi.org/10.15587/1729-4061.2025.340492Ключові слова:

аналіз даних, велика мовна модель, GPT-4.1-mini, категоризація тексту, багатомовне оцінювання, українська моваАнотація

Об’єктом дослідження є процес багатокласової автоматичної категоризації користувацьких запитань за допомогою великих мовних моделей в умовах переходу з англійської мови на українську.

Наукова проблема полягає в тому, що більшість сучасних великих мовних моделей (LLM) оптимізовані для англійської мови, тоді як ефективність їхнього застосування до морфологічно складних і ресурсно обмежених мов, зокрема української, залишається недостатньо вивченою.

У роботі розроблено та реалізовано експериментальний підхід до оцінювання переносимості моделі GPT-4.1-mini з англійської на українську мову в задачі класифікації 11 047 користувацьких запитань, що охоплюють дев’ять прикладних доменів. Для аналізу застосовано традиційні метрики (Recall, Precision, Weighted-F₁, Macro-F₁) та новий показник Uncertainty/Error Rate (U/E), який характеризує частку відмов і «галюцинацій» моделі.

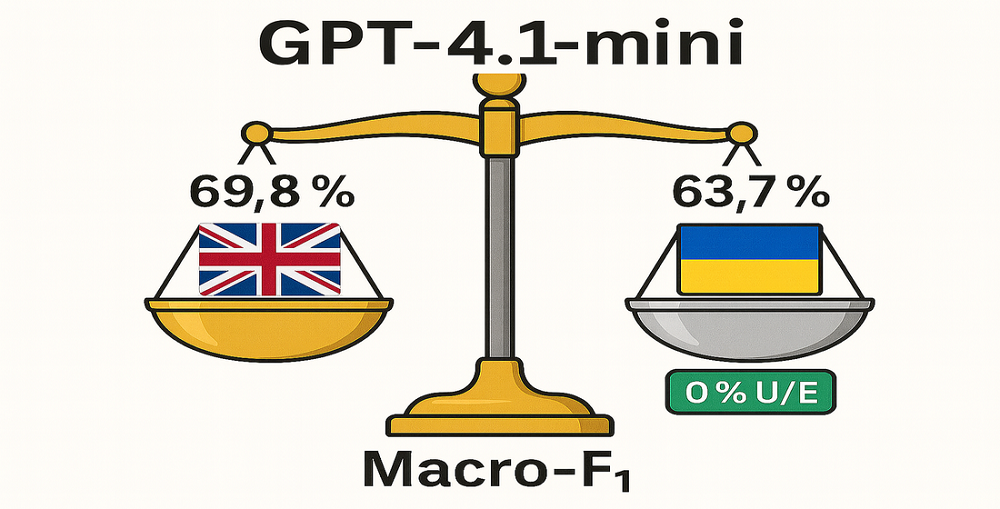

Результати дослідження показали, що найвища якість досягається на англомовному наборі (Macro-F₁ = 69.78%, U/E = 0.05%). При використанні україномовних промптів Macro-F₁ знизився до 63.73%, проте U/E дорівнював 0%, що свідчить про підвищену надійність відповідей. Використання англомовних промптів для україномовних даних дозволило зберегти майже незмінний рівень точності (Macro-F₁ = 69.66%), демонструючи сильні механізми внутрішнього перекладу та узагальнення.

Особливістю роботи є використання великого багатодоменного паралельного корпусу, зіставлення промптів двома мовами, використання нової моделі GPT-4.1-mini та введення метрики U/E як критерію надійності. Запропонований підхід довів можливість використання моделі GPT-4.1-mini для україномовних інформаційних сервісів без додаткового навчання, зокрема для автоматичної маршрутизації запитів у фінансовому, медичному, юридичному та інших доменах

Посилання

- Huang, J., Xu, Y., Wang, Q., Wang, Q. (Cheems), Liang, X., Wang, F. et al. (2025). Foundation models and intelligent decision-making: Progress, challenges, and perspectives. The Innovation, 6 (6), 100948. https://doi.org/10.1016/j.xinn.2025.100948

- Doddapaneni, S., Ramesh, G., Khapra, M., Kunchukuttan, A., Kumar, P. (2025). A Primer on Pretrained Multilingual Language Models. ACM Computing Surveys, 57 (9), 1–39. https://doi.org/10.1145/3727339

- Yermolenko, S. (2019). From the history of Ukrainian stylistics: from stylistics of languages to integrative stylistics. Ukrainska Mova, 1, 3–17. https://doi.org/10.15407/ukrmova2019.01.003

- Zakon Ukrainy «Pro zabezpechennia funktsionuvannia ukrainskoi movy yak derzhavnoi» No. 2704-VIII. Verkhovna Rada Ukrainy. Available at: https://zakon.rada.gov.ua/laws/show/2704-19

- Syromiatnikov, M. V., Ruvinskaya, V. M., Troynina, A. S. (2024). ZNO-Eval: Benchmarking reasoning capabilities of large language models in Ukrainian. Informatics. Culture. Technology, 1 (1), 186–191. https://doi.org/10.15276/ict.01.2024.27

- Mitsa, O., Voloshchuk, Y., Levchuk, O., Petsko, V. (2025). A Comparative Study of Machine Learning Algorithms and the Prompting Approach Using GPT-3.5 Turbo for Text Categorization. Advances in Computer Science for Engineering and Education VII, 156–167. https://doi.org/10.1007/978-3-031-84228-3_13

- Voloshchuk, Y. O., Mitsa, O. V. (2024). Comparison of text categorization efficiency using the prompting approach with GPT-3.5-turbo and GPT-4-turbo. Science and Technology Today, 6 (34), 768–777. https://doi.org/10.52058/2786-6025-2024-6(34)-768-777

- Garrachón Ruiz, A., de La Rosa, T., Borrajo, D. (2025). TRIM: Token Reduction and Inference Modeling for Cost-Effective Language Generation. arXiv. https://doi.org/10.48550/arXiv.2412.07682

- Chen, L., Zaharia, M., Zou, J. (2024). FrugalGPT: How to use large language models while reducing cost and improving performance. Transactions on Machine Learning Research. Available at: https://openreview.net/forum?id=cSimKw5p6R

- Wang, Z., Pang, Y., Lin, Y., Zhu, X. (2024). Adaptable and Reliable Text Classification using Large Language Models. 2024 IEEE International Conference on Data Mining Workshops (ICDMW), 67–74. https://doi.org/10.1109/icdmw65004.2024.00015

- Panchenko, D., Maksymenko, D., Turuta, O., Yerokhin, A., Daniiel, Y., Turuta, O. (2022). Evaluation and Analysis of the NLP Model Zoo for Ukrainian Text Classification. Information and Communication Technologies in Education, Research, and Industrial Applications, 109–123. https://doi.org/10.1007/978-3-031-20834-8_6

- Panchenko, D., Maksymenko, D., Turuta, O., Luzan, M., Tytarenko, S., Turuta, O. (2022). Ukrainian News Corpus as Text Classification Benchmark. ICTERI 2021 Workshops, 550–559. https://doi.org/10.1007/978-3-031-14841-5_37

- Wang, Y., Wang, W., Chen, Q., Huang, K., Nguyen, A., De, S. (2024). Zero-shot text classification with knowledge resources under label-fully-unseen setting. Neurocomputing, 610, 128580. https://doi.org/10.1016/j.neucom.2024.128580

- Ulčar, M., Žagar, A., Armendariz, C. S., Repar, A., Pollak, S., Purver, M., Robnik-Šikonja, M. (2026). Mono- and cross-lingual evaluation of representation language models on less-resourced languages. Computer Speech & Language, 95, 101852. https://doi.org/10.1016/j.csl.2025.101852

- Han, B., Yang, S. T., LuVogt, C. (2025). Cross-Lingual Text Classification with Large Language Models. Companion Proceedings of the ACM on Web Conference 2025, 1005–1008. https://doi.org/10.1145/3701716.3715567

- Lai, V., Ngo, N., Pouran Ben Veyseh, A., Man, H., Dernoncourt, F., Bui, T., Nguyen, T. (2023). ChatGPT Beyond English: Towards a Comprehensive Evaluation of Large Language Models in Multilingual Learning. Findings of the Association for Computational Linguistics: EMNLP 2023. https://doi.org/10.18653/v1/2023.findings-emnlp.878

- Prytula, M. (2024). Fine-tuning BERT, DistilBERT, XLM-RoBERTa and Ukr-RoBERTa models for sentiment analysis of Ukrainian language reviews. Artificial Intelligence, 2, 85–97. https://doi.org/10.15407/jai2024.02.085

- Dementieva, D., Khylenko, V., Groh, G. (2025). Cross-lingual text classification transfer: The case of Ukrainian. arXiv. https://doi.org/10.48550/arXiv.2404.02043

- Hamotskyi, І., Levbarg, A., Hänig, C. (2024). Eval-UA-tion 1.0: Benchmark for Evaluating Ukrainian (Large) Language Models. UNLP 2024. Available at: https://hal.science/hal-04534651v2

- Voloshchuk, Yu., Mitsa, O. (2024). Otsinka stabilnosti rezultativ katehoryzatsiyi tekstu z vykorystanniam prompting pidkhodu z velykymy movnymy modeliamy. Materialy konferentsii MTsND. https://doi.org/10.62731/mcnd-21.06.2024.002

- GPT-4.1. OpenAI. Available at: https://openai.com/index/gpt-4-1/

- Pricing. OpenAI. Available at: https://platform.openai.com/docs/pricing

- Voloshchuk, Yu., Mitsa, O. (2024). Porivniannia prompting pidkhodiv z vykorystanniam gpt 4-turbo dlia tekstovoi katehoryzatsiyi. Materialy konferentsii MTsND. https://doi.org/10.62731/mcnd-14.06.2024.006

- Ahia, O., Kumar, S., Gonen, H., Kasai, J., Mortensen, D., Smith, N., Tsvetkov, Y. (2023). Do All Languages Cost the Same? Tokenization in the Era of Commercial Language Models. Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing, 9904–9923. https://doi.org/10.18653/v1/2023.emnlp-main.614

- Li, X., Zhang, K. (2025). Heterogeneous Graph Neural Network with Multi-View Contrastive Learning for Cross-Lingual Text Classification. Applied Sciences, 15 (7), 3454. https://doi.org/10.3390/app15073454

- Gui, A., Xiao, H. (2024). Multi-level multilingual semantic alignment for zero-shot cross-lingual transfer learning. Neural Networks, 173, 106217. https://doi.org/10.1016/j.neunet.2024.106217

- Huang, K., Shi, Y., Ding, D., Li, Y., Fei, Y., Lakshmanan, L., Xiao, X. (2025). ThriftLLM: On Cost-Effective Selection of Large Language Models for Classification Queries. Proceedings of the VLDB Endowment, 18 (11), 4410–4423. https://doi.org/10.14778/3749646.3749702

- Mitsa, O., Sharkan, V., Maksymchuk, V., Varha, S., Shkurko, H. (2023). Ethnocultural, Educational and Scientific Potential of the Interactive Dialects Map. 2023 IEEE International Conference on Smart Information Systems and Technologies (SIST), 226–231. https://doi.org/10.1109/sist58284.2023.10223544

- Kotsovsky, V. (2024). Learning of Multi-valued Multithreshold Neural Units. Proceedings of the 8th International Conference on Computational Linguistics and Intelligent Systems. Volume III: Intelligent Systems Workshop. https://doi.org/10.31110/colins/2024-3/004

- Kotsovsky, V., Batyuk, A. (2024). Towards the Design of Bithreshold ANN Regressor. 2024 IEEE 19th International Conference on Computer Science and Information Technologies (CSIT), 1–4. https://doi.org/10.1109/csit65290.2024.10982560

- Kotsovsky, V. (2025). Multithreshold neurons with smoothed activation functions. Proceedings of the Intelligent Systems Workshop at 9th International Conference on Computational Linguistics and Intelligent Systems (CoLInS-2025). https://doi.org/10.31110/colins/2025-2/007

- Lupei, M., Mitsa, O., Sharkan, V., Vargha, S., Gorbachuk, V. (2022). The Identification of Mass Media by Text Based on the Analysis of Vocabulary Peculiarities Using Support Vector Machines. 2022 International Conference on Smart Information Systems and Technologies (SIST), 1–6. https://doi.org/10.1109/sist54437.2022.9945774

##submission.downloads##

Опубліковано

Як цитувати

Номер

Розділ

Ліцензія

Авторське право (c) 2025 Yurii Voloshchuk, Oleksandr Mitsa

Ця робота ліцензується відповідно до Creative Commons Attribution 4.0 International License.

Закріплення та умови передачі авторських прав (ідентифікація авторства) здійснюється у Ліцензійному договорі. Зокрема, автори залишають за собою право на авторство свого рукопису та передають журналу право першої публікації цієї роботи на умовах ліцензії Creative Commons CC BY. При цьому вони мають право укладати самостійно додаткові угоди, що стосуються неексклюзивного поширення роботи у тому вигляді, в якому вона була опублікована цим журналом, але за умови збереження посилання на першу публікацію статті в цьому журналі.

Ліцензійний договір – це документ, в якому автор гарантує, що володіє усіма авторськими правами на твір (рукопис, статтю, тощо).

Автори, підписуючи Ліцензійний договір з ПП «ТЕХНОЛОГІЧНИЙ ЦЕНТР», мають усі права на подальше використання свого твору за умови посилання на наше видання, в якому твір опублікований. Відповідно до умов Ліцензійного договору, Видавець ПП «ТЕХНОЛОГІЧНИЙ ЦЕНТР» не забирає ваші авторські права та отримує від авторів дозвіл на використання та розповсюдження публікації через світові наукові ресурси (власні електронні ресурси, наукометричні бази даних, репозитарії, бібліотеки тощо).

За відсутності підписаного Ліцензійного договору або за відсутністю вказаних в цьому договорі ідентифікаторів, що дають змогу ідентифікувати особу автора, редакція не має права працювати з рукописом.

Важливо пам’ятати, що існує і інший тип угоди між авторами та видавцями – коли авторські права передаються від авторів до видавця. В такому разі автори втрачають права власності на свій твір та не можуть його використовувати в будь-який спосіб.