A study of algorithms for recognizing handwritten characters of different languages using the KAN neural network model

DOI:

https://doi.org/10.31498/2225-6733.49.1.2024.321184

Keywords:

optical character recognition, neural network, Kolmogorov-Arnold network, transformer architecture, Kolmogorov-Arnold transformer, rational functions

Abstract

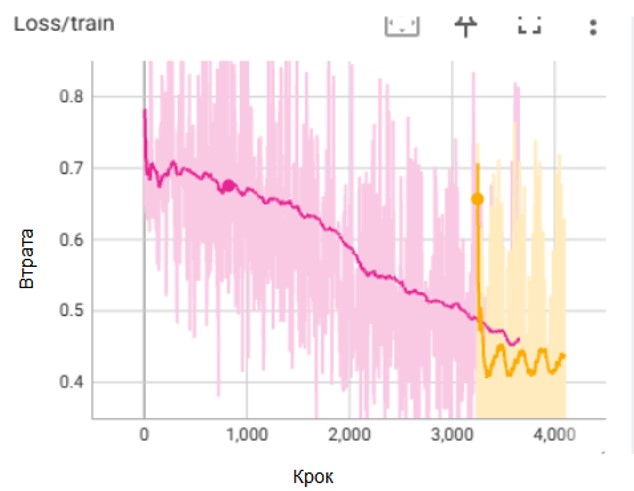

This work analyzes the most effective existing optical character recognition methods that rely on deep neural networks. The analysis showed that the modern neural network architectures with the best recognition accuracy share a fixed upper limit on accuracy. It also established that every analyzed architecture contains a multilayer perceptron. To improve recognition performance, the Kolmogorov-Arnold network is proposed as an alternative to networks based on the multilayer perceptron. The architecture of the created model is based on a two-component transformer: the first component is a vision transformer used as the encoder, and the second is a language transformer used as the decoder. The Kolmogorov-Arnold network replaces the multilayer-perceptron feed-forward network in each transformer, both encoder and decoder. Improvement over the results of existing neural networks is achieved through transfer learning, for which the created model uses group rational functions as the main learnable elements of the Kolmogorov-Arnold network. The model was trained on datasets of text-line images from three different writing systems (alphabetic, abugida, and logographic), represented by the English, Devanagari, and Chinese scripts respectively. The experiments showed that the model with the Kolmogorov-Arnold network achieves high character recognition rates on the Chinese and Devanagari datasets but low rates on English script. The results point to new opportunities for improving the reliability and efficiency of modern handwritten text recognition systems.
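The idea of replacing the feed-forward block with group rational functions can be illustrated with a minimal sketch. It assumes the "safe" Padé form P(x) / (1 + |Q(x)|) and a layer that applies one shared rational function per channel group and then a linear map. The names `rational` and `GroupRationalLayer`, the coefficient layout, and the example values are hypothetical and are not taken from the article's implementation.

```python
from typing import List, Sequence


def rational(x: float, a: Sequence[float], b: Sequence[float]) -> float:
    """Safe Pade-style rational function P(x) / (1 + |Q(x)|).

    a: numerator coefficients a_0..a_m.
    b: denominator coefficients b_1..b_n (the constant term is fixed to 1,
       so the denominator can never reach zero).
    """
    p = sum(c * x ** i for i, c in enumerate(a))
    q = sum(c * x ** (j + 1) for j, c in enumerate(b))
    return p / (1.0 + abs(q))


class GroupRationalLayer:
    """Illustrative KAN-style layer: group-wise rational activation + linear map.

    Channels are split into equal groups; every channel in a group shares
    one set of rational coefficients (an assumption of this sketch).
    """

    def __init__(
        self,
        weights: List[List[float]],
        groups_a: List[List[float]],
        groups_b: List[List[float]],
    ) -> None:
        if len(groups_a) != len(groups_b):
            raise ValueError("need one numerator and one denominator per group")
        self.weights = weights
        self.groups_a = groups_a
        self.groups_b = groups_b

    def __call__(self, x: Sequence[float]) -> List[float]:
        n_groups = len(self.groups_a)
        if len(x) % n_groups != 0:
            raise ValueError("input size must be divisible by the group count")
        size = len(x) // n_groups
        # Apply the rational function of the group each channel belongs to.
        activated = [
            rational(v, self.groups_a[i // size], self.groups_b[i // size])
            for i, v in enumerate(x)
        ]
        # Plain linear map over the activated channels (bias omitted).
        return [sum(w * v for w, v in zip(row, activated)) for row in self.weights]


if __name__ == "__main__":
    layer = GroupRationalLayer(
        weights=[[1.0, 0.0, 0.0, 0.0], [0.0, 0.0, 1.0, 1.0]],
        groups_a=[[0.0, 1.0], [0.0, 0.0, 1.0]],  # x and x^2 numerators
        groups_b=[[], [1.0]],                    # denominators 1 and 1 + |x|
    )
    print(layer([3.0, 5.0, -1.0, 1.0]))
```

In a trained model the coefficients in `groups_a` and `groups_b` would be learned together with the weights; here they are fixed by hand only to keep the sketch small.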

License

This work is licensed under a Creative Commons Attribution 4.0 International License.

The journal "Вісник Приазовського державного технічного університету. Серія: Технічні науки" is published under the CC-BY license (Attribution).

This license allows others to distribute, remix, adapt, and build upon the work, even commercially, as long as they credit the original authorship. It is the most accommodating of the licenses offered and is recommended for maximum dissemination and use of licensed materials.

Authors who publish in this journal agree to the following terms:

1. Authors retain the right of authorship of their work and grant the journal the right of first publication of the work under the terms of the Creative Commons Attribution License, which allows others to freely distribute the published work with mandatory acknowledgement of the authors of the original work and of its first publication in this journal.

2. Authors may enter into separate, additional agreements for the non-exclusive distribution of the work in the form in which it was published by this journal (for example, depositing it in an institutional repository or publishing it as part of a monograph), provided that the reference to its first publication in this journal is retained.