The role of a new generation of artificial intelligence systems in human development (the case of the ChatGPT network)

DOI:

https://doi.org/10.31498/2225-6733.49.1.2024.321205

Keywords:

artificial intelligence, ChatGPT, entropy change vector, subjecthood of ChatGPT, objecthood of the user

Abstract

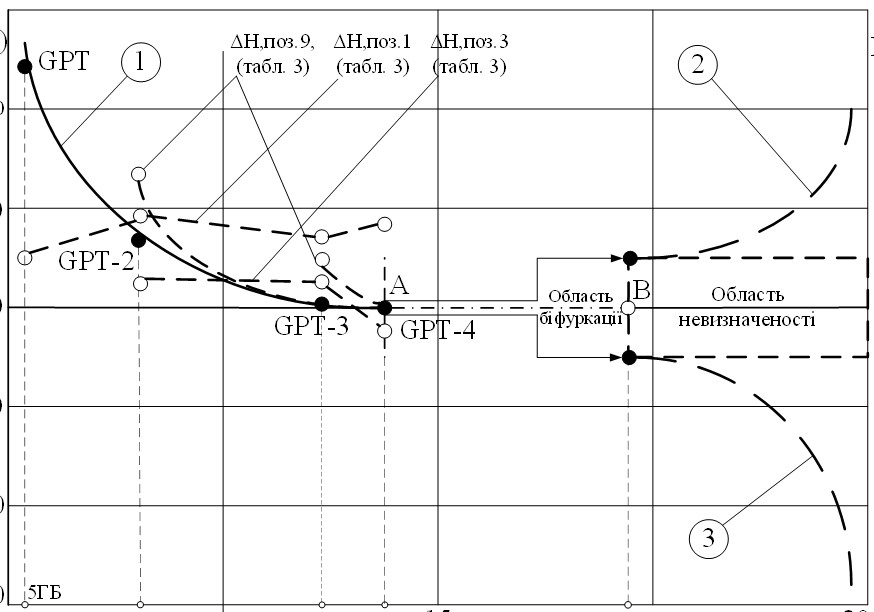

The paper examines the pros and cons of the relationship between human and machine, where "machine" means artificial intelligence (AI) systems. For the first time, attention is given to the subjecthood and objecthood of the parts of the "human-machine" system. System criteria such as the relation to thermodynamic non-equilibrium and the vectors of entropy change, applied to both humans and AI systems, show that under current conditions and at the current pace of AI development, illustrated by the GPT series of models, more and more advantages lie with AI systems. Humans are gradually, and not for the first time, losing ground in competition with the "machine". Cognition, a seemingly inviolable human quality, is increasingly mirrored in new AI systems and in GPT in particular. Components of cognition such as vision, sensory perception, and hearing are gradually being reproduced in the "digitized" functions of artificial intelligence. One of the pressing reasons for these changes is the shift in the human role within the "human-machine-environment" system from the traditional position of subject to that of object, together with a gradual loss of the ability to influence AI systems. It is shown that the main goal is AI's capacity for self-development: acquiring new knowledge from the knowledge available at a given stage, which is achieved simply by enlarging its super-memory and processing it at high speed by means of the specialized generative neural network "Transformer". This fosters a specialized logic of near-instant enumeration of options, which proves superior to the selective cognitive logic of humans and may signal, for example, a transfer of agency, or even subjecthood, from humans to AI. Such a transfer can occur in only one foreseeable case: when AI finds the internal capacity to compete with humans in their cognitive qualities.

License

This work is licensed under a Creative Commons Attribution 4.0 International License.

The journal "Вісник Приазовського державного технічного університету. Серія: Технічні науки" is published under the CC BY (Attribution) license.

This license lets others distribute, edit, adapt, and build upon the work, even commercially, as long as they credit the authors. It is the most accommodating of the licenses offered and is recommended for maximum dissemination and use of licensed materials.

Authors who publish in this journal agree to the following terms:

1. Authors retain authorship rights to their work and grant the journal the right of first publication under the Creative Commons Attribution License, which allows others to freely distribute the published work provided they credit the authors of the original work and its first publication in this journal.

2. Authors may enter into separate, additional agreements for the non-exclusive distribution of the work in the form in which it was published by this journal (for example, depositing it in an institutional repository or publishing it as part of a monograph), provided that the reference to its first publication in this journal is retained.