Enhancing 3D scene understanding via text annotations

DOI:

https://doi.org/10.15587/1729-4061.2025.323757Keywords:

large language models, vision language models, multimodal learning, spatial understandingAbstract

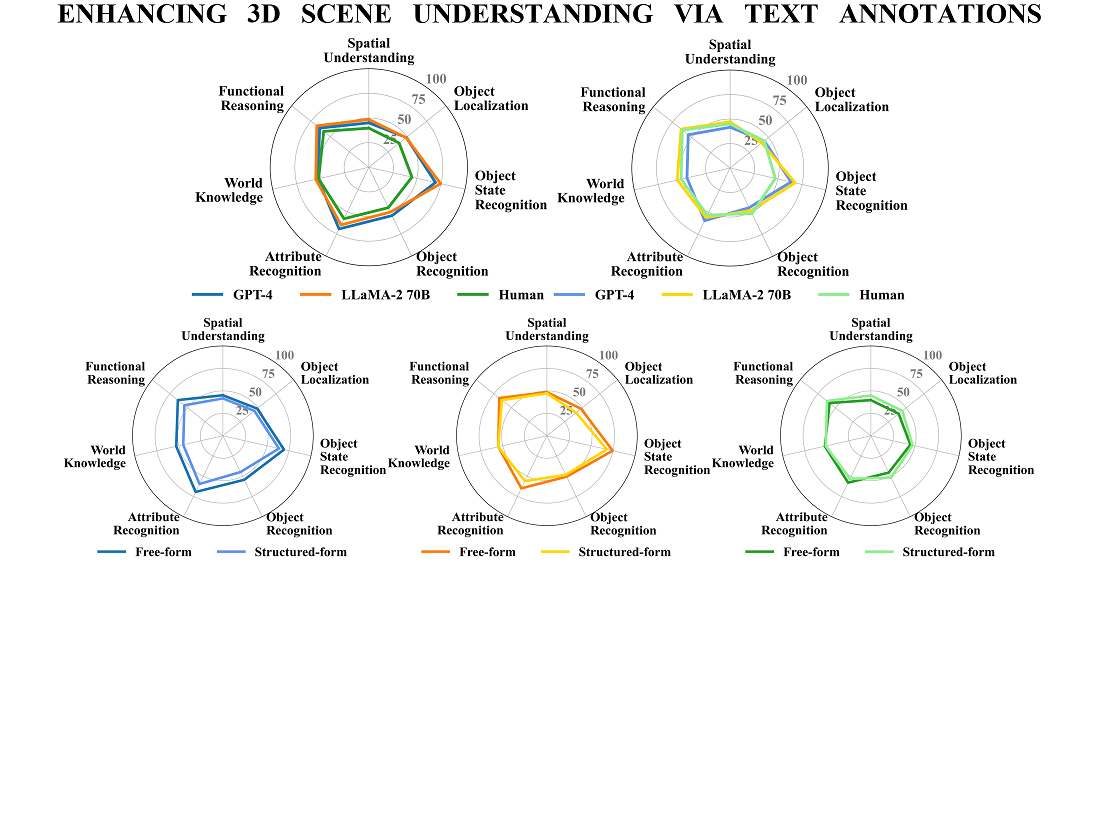

The object of this study is the use of text annotations as a form of 3D scene representation. The paper investigates the task of integrating large-scale language models (LLMs) into complex 3D environments. Using the Embodied Question Answering task as an example, we analyze different types of scene annotations and evaluate the performance of LLMs on a subset of test episodes from the OpenEQA dataset. The aim of the study was to evaluate the effectiveness of textual scene descriptions compared to visual data for solving EQA tasks. The methodology implied estimating the optimal context length for scene annotations, measuring the differences between free-form and structured annotations, as well as analyzing the impact of model size on performance, and comparing model results with the level of human comprehension of scene annotations. The results showed that detailed descriptions that include a list of objects, clearly described attributes, spatial relationships, and potential interactions improve EQA performance, even outperforming the most advanced method in OpenEQA – GPT-4V (57.6 % and 58.5 % for GPT-4 and LLaMA-2, respectively, vs. 55.3 % for GPT-4V). The results can be explained by the high level of detail that provides the model with contextually clear and interpretable information about the scene. The optimal context length for textual descriptions was estimated to be 1517 tokens. Nevertheless, even the best textual representations do not reach the level of perception and reasoning demonstrated by humans when presented with visual data (50 % for textual data vs. 86.8 % for video). The results are important for the development of multimodal models for Embodied AI tasks, including Robotics and Autonomous Navigation Systems. They could also be used to improve user interaction with three-dimensional spaces in the field of virtual or augmented reality

References

- Vaswani, A., Shazeer, N. M., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N. et al. (2017). Attention is All you Need. NIPS'17: Proceedings of the 31st International Conference on Neural Information Processing Systems. Available at: https://dl.acm.org/doi/10.5555/3295222.3295349

- Achiam, J., Adler, S., Agarwal, S., Ahmad, L., Akkaya, I., Aleman, F. L. (2023). Gpt-4 technical report. ArXiv. https://doi.org/10.48550/arXiv.2303.08774

- Gpt-4v(ision) technical work and authors. OpenAI. Available at: https://openai.com/contributions/gpt-4v

- . Gpt-4v(ision) system card. OpenAI. Available at: https://openai.com/index/gpt-4v-system-card/

- Yang, Z., Li, L., Lin, K., Wang, J., Lin, C., Liu, Z., Wang, L. (2023). The Dawn of LMMs: Preliminary Explorations with GPT-4V(ision). ArXiv. https://doi.org/10.48550/arXiv.2309.17421

- Touvron, H., Martin, L., Stone, K., Albert, P., Almahairi, A., Babaei, Y. et al. (2023). Llama 2: Open Foundation and Fine-Tuned Chat Models. ArXiv. https://doi.org/10.48550/arXiv.2307.09288

- Annepaka, Y., Pakray, P. (2024). Large language models: a survey of their development, capabilities, and applications. Knowledge and Information Systems, 67 (3), 2967–3022. https://doi.org/10.1007/s10115-024-02310-4

- Wei, J., Tay, Y., Bommasani, R., Raffel, C., Zoph, B., Borgeaud, S. et al. (2022). Emergent Abilities of Large Language Models. ArXiv. https://doi.org/10.48550/arXiv.2206.07682

- Ahn, M., Brohan, A., Brown, N., Chebotar, Y., Cortes, O., David, B. Et al. (2022). Do As I Can, Not As I Say: Grounding Language in Robotic Affordances. Conference on Robot Learning. ArXiv. https://doi.org/10.48550/arXiv.2204.01691

- Huang, W., Xia, F., Xiao, T., Chan, H., Liang, J., Florence, P. et al. (2023). Inner monologue: Embodied reasoning through planning with language models. ArXiv. https://doi.org/10.48550/arXiv.2207.05608

- Chen, B., Xia, F., Ichter, B., Rao, K., Gopalakrishnan, K., Ryoo, M. S. et al. (2023). Open-vocabulary Queryable Scene Representations for Real World Planning. 2023 IEEE International Conference on Robotics and Automation (ICRA). https://doi.org/10.1109/icra48891.2023.10161534

- Zhu, D., Chen, J., Shen, X., Li, X., Elhoseiny, M. (2023). MiniGPT-4: Enhancing Vision-Language Understanding with Advanced Large Language Models. ArXiv. https://doi.org/10.48550/arXiv.2304.10592

- Chen, J., Zhu, D., Shen, X., Li, X., Liu, Z., Zhang, P., Krishnamoorthi, R. et al. (2023). MiniGPT-v2: large language model as a unified interface for vision-language multi-task learning. ArXiv. https://doi.org/10.48550/arXiv.2310.09478

- Liu, H., Li, C., Wu, Q., Lee, Y. J. (2023). Visual instruction tuning. NIPS '23: Proceedings of the 37th International Conference on Neural Information Processing Systems. Available at: https://dl.acm.org/doi/10.5555/3666122.3667638

- Liu, H., Li, C., Li, Y., Lee, Y. J. (2024). Improved Baselines with Visual Instruction Tuning. 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 26286–26296. https://doi.org/10.1109/cvpr52733.2024.02484

- Driess, D., Xia, F., Sajjadi, M. S. M., Lynch, C., Chowdhery, A., Ichter, B. et al. (2023). PaLM-E: An embodied multimodal language model. ICML'23: Proceedings of the 40th International Conference on Machine Learning. Available at: https://dl.acm.org/doi/10.5555/3618408.3618748

- Majumdar, A., Ajay, A., Zhang, X., Putta, P., Yenamandra, S., Henaff, M. et al. (2024). OpenEQA: Embodied Question Answering in the Era of Foundation Models. 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 16488–16498. https://doi.org/10.1109/cvpr52733.2024.01560

- Das, A., Datta, S., Gkioxari, G., Lee, S., Parikh, D., Batra, D. (2018). Embodied Question Answering. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), 2135–213509. https://doi.org/10.1109/cvprw.2018.00279

- Dai, A., Chang, A. X., Savva, M., Halber, M., Funkhouser, T., Niessner, M. (2017). ScanNet: Richly-Annotated 3D Reconstructions of Indoor Scenes. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2432–2443. https://doi.org/10.1109/cvpr.2017.261

Downloads

Published

How to Cite

Issue

Section

License

Copyright (c) 2025 Ruslan Partsey, Vasyl Teslyuk, Oleksandr Maksymets, Vladyslav Humennyy, Volodymyr Kuzma

This work is licensed under a Creative Commons Attribution 4.0 International License.

The consolidation and conditions for the transfer of copyright (identification of authorship) is carried out in the License Agreement. In particular, the authors reserve the right to the authorship of their manuscript and transfer the first publication of this work to the journal under the terms of the Creative Commons CC BY license. At the same time, they have the right to conclude on their own additional agreements concerning the non-exclusive distribution of the work in the form in which it was published by this journal, but provided that the link to the first publication of the article in this journal is preserved.

A license agreement is a document in which the author warrants that he/she owns all copyright for the work (manuscript, article, etc.).

The authors, signing the License Agreement with TECHNOLOGY CENTER PC, have all rights to the further use of their work, provided that they link to our edition in which the work was published.

According to the terms of the License Agreement, the Publisher TECHNOLOGY CENTER PC does not take away your copyrights and receives permission from the authors to use and dissemination of the publication through the world's scientific resources (own electronic resources, scientometric databases, repositories, libraries, etc.).

In the absence of a signed License Agreement or in the absence of this agreement of identifiers allowing to identify the identity of the author, the editors have no right to work with the manuscript.

It is important to remember that there is another type of agreement between authors and publishers – when copyright is transferred from the authors to the publisher. In this case, the authors lose ownership of their work and may not use it in any way.