Identifying the impact of Metaperceptron in optimizing neural networks: a comparative study of gradient descent and metaheuristic approaches

DOI:

https://doi.org/10.15587/1729-4061.2025.326955

Keywords:

Metaperceptron, neural networks, gradient descent, metaheuristic algorithms, optimization

Abstract

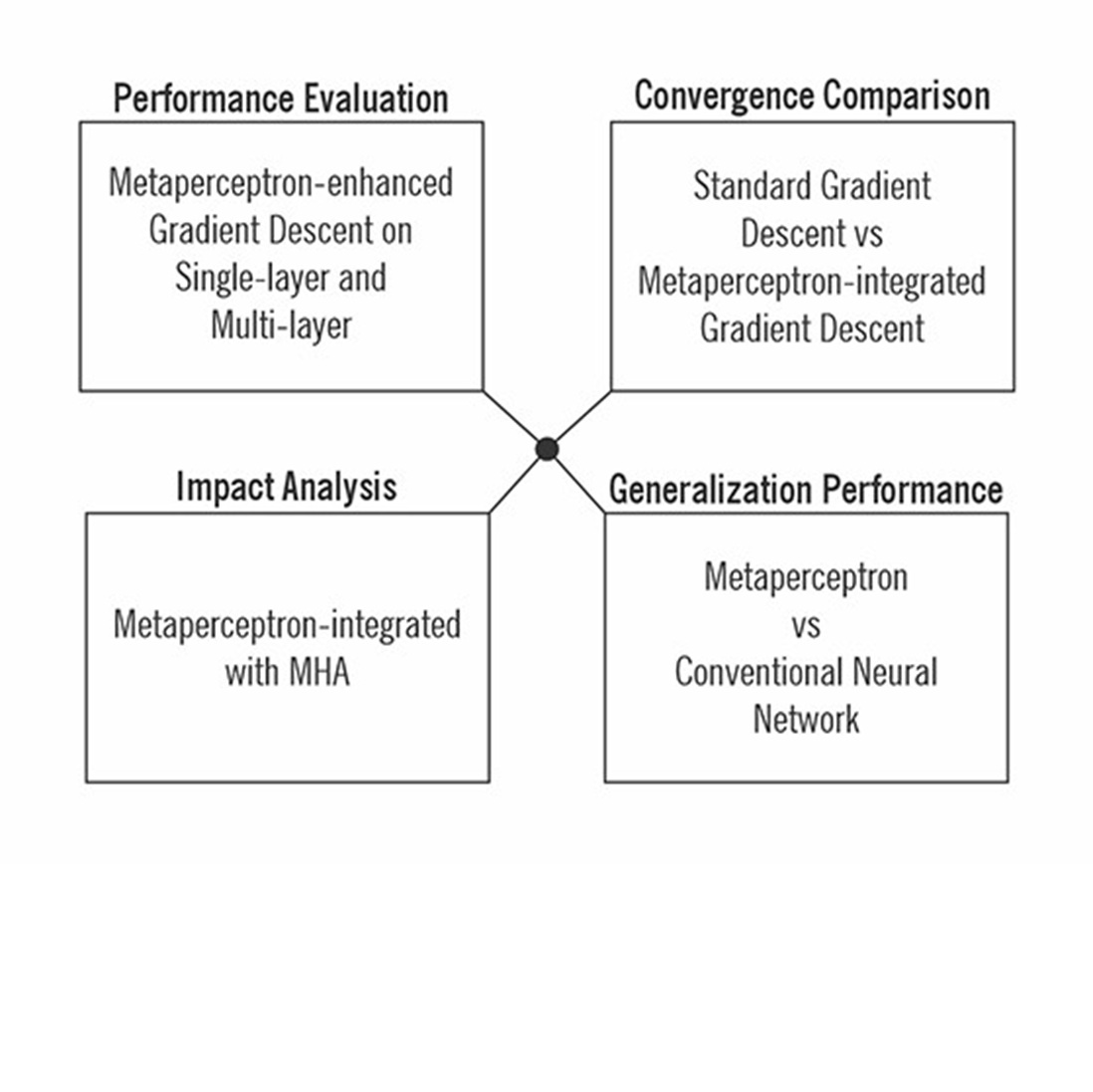

This study investigates the application of the Metaperceptron framework as an adaptive optimization mechanism for training neural networks to diagnose polycystic ovary syndrome (PCOS). The research addresses persistent weaknesses of conventional optimization methods, namely slow convergence, entrapment in local minima, and hyperparameter sensitivity, which limit the efficiency and generalization capability of artificial neural networks. By integrating Metaperceptron with both gradient descent (GD) and a genetic algorithm (GA), this work demonstrates significant improvements in convergence speed and diagnostic accuracy. Specifically, Metaperceptron-enhanced GD reduced convergence time by nearly 40% while maintaining high accuracy (0.8950 for the single-layer neural network and 0.9100 for the multi-layer neural network). These results were achieved through dynamic learning rate adjustment and meta-level control over search strategies, which enable a better exploration-exploitation balance during training. The findings are explained by the framework’s ability to respond adaptively to gradient landscapes and dataset characteristics, offering a more stable and efficient optimization process. Practical implementation of the proposed method is feasible where data quality and representativeness are ensured, particularly in medical diagnostics and other domains involving imbalanced or noisy datasets.
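The contrast between the two optimizer families named in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation and does not use the MetaPerceptron library: it trains a single logistic unit on synthetic two-feature data (not PCOS data), once with gradient descent under a simple "bold driver" learning-rate rule, which stands in here for dynamic learning rate adjustment, and once with a basic elitist genetic algorithm. All function names, hyperparameters, and the data generator are assumptions made for illustration.

```python
import math
import random

def sigmoid(z):
    # Clamp the input so math.exp cannot overflow for large |z|.
    z = max(-60.0, min(60.0, z))
    return 1.0 / (1.0 + math.exp(-z))

def loss(w, data):
    # Mean binary cross-entropy of a single logistic unit.
    eps = 1e-12
    total = 0.0
    for x, y in data:
        p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)))
        total -= y * math.log(p + eps) + (1 - y) * math.log(1 - p + eps)
    return total / len(data)

def gradient(w, data):
    # Gradient of the mean cross-entropy with respect to the weights.
    g = [0.0] * len(w)
    for x, y in data:
        err = sigmoid(sum(wi * xi for wi, xi in zip(w, x))) - y
        for j, xj in enumerate(x):
            g[j] += err * xj / len(data)
    return g

def train_gd_adaptive(data, dim, epochs=200, lr=0.1):
    # Assumed "bold driver" rule: accept a step and grow the learning
    # rate if the loss improved, otherwise reject it and halve the rate.
    w = [0.0] * dim
    best = loss(w, data)
    for _ in range(epochs):
        g = gradient(w, data)
        cand = [wi - lr * gi for wi, gi in zip(w, g)]
        cand_loss = loss(cand, data)
        if cand_loss < best:
            w, best, lr = cand, cand_loss, lr * 1.1
        else:
            lr *= 0.5
    return w, best

def train_ga(data, dim, pop_size=30, generations=60, sigma=0.3, rng=None):
    # Elitist GA: keep the better half, refill the population with
    # uniform crossover of two elite parents plus Gaussian mutation.
    rng = rng or random.Random(0)
    pop = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(pop_size)]
    for _ in range(generations):
        pop.sort(key=lambda w: loss(w, data))
        elite = pop[: pop_size // 2]
        children = []
        while len(elite) + len(children) < pop_size:
            a, b = rng.sample(elite, 2)
            children.append([rng.choice(pair) + rng.gauss(0, sigma)
                             for pair in zip(a, b)])
        pop = elite + children
    best = min(pop, key=lambda w: loss(w, data))
    return best, loss(best, data)

def make_data(n=200, seed=1):
    # Synthetic, noisy, roughly linearly separable two-class data.
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        x1, x2 = rng.gauss(0, 1), rng.gauss(0, 1)
        y = 1 if x1 + 0.5 * x2 + rng.gauss(0, 0.3) > 0 else 0
        data.append(([x1, x2, 1.0], y))  # trailing 1.0 is the bias input
    return data

if __name__ == "__main__":
    data = make_data()
    start = loss([0.0, 0.0, 0.0], data)
    _, gd_loss = train_gd_adaptive(data, dim=3)
    _, ga_loss = train_ga(data, dim=3)
    print(f"initial={start:.4f} gd={gd_loss:.4f} ga={ga_loss:.4f}")
```

The gradient-based trainer uses local slope information and adapts its step size, whereas the genetic algorithm only evaluates the loss and explores by recombination and mutation. This is the exploration-exploitation trade-off the abstract refers to, shown here on a toy problem only.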

License

Copyright (c) 2025 Darwin Darwin, Tengku Henny Febriana Harumy, Syahril Efendi, Carles Juliandy, Binarwan Halim

This work is licensed under a Creative Commons Attribution 4.0 International License.

The assignment of copyright and the conditions for its transfer (identification of authorship) are set out in the License Agreement. In particular, the authors retain the right of authorship of their manuscript and grant the journal the right of first publication of this work under the terms of the Creative Commons CC BY license. At the same time, they have the right to conclude additional agreements of their own concerning the non-exclusive distribution of the work in the form in which it was published by this journal, provided that the link to the first publication of the article in this journal is preserved.

A license agreement is a document in which the author warrants that he/she owns all copyright for the work (manuscript, article, etc.).

The authors, signing the License Agreement with TECHNOLOGY CENTER PC, have all rights to the further use of their work, provided that they link to our edition in which the work was published.

According to the terms of the License Agreement, the Publisher TECHNOLOGY CENTER PC does not take away your copyrights and receives permission from the authors to use and disseminate the publication through the world's scientific resources (own electronic resources, scientometric databases, repositories, libraries, etc.).

In the absence of a signed License Agreement, or if the agreement lacks identifiers that allow the author to be identified, the editors have no right to work with the manuscript.

It is important to remember that there is another type of agreement between authors and publishers – when copyright is transferred from the authors to the publisher. In this case, the authors lose ownership of their work and may not use it in any way.