Formalization of text prompts to artificial intelligence systems

DOI:

https://doi.org/10.15587/1729-4061.2025.335473

Keywords:

prompt formalization, large language models, artificial intelligence, structured templates, wargames

Abstract

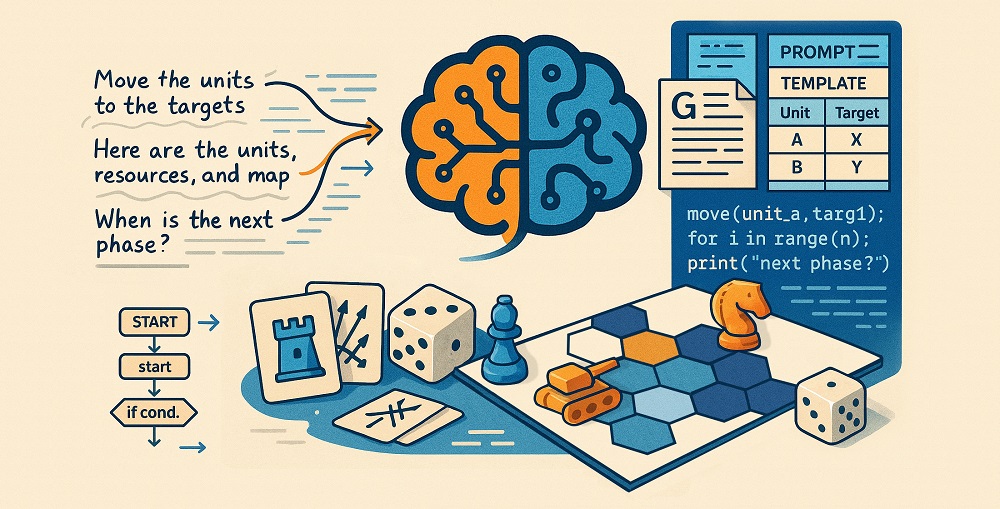

The object of this study is the process of formalizing text prompts to large language models in order to automatically generate action cards for hexagonal tabletop wargames. The problem addressed is that natural language prompts suffer from ambiguity (42% of terms are misinterpreted), contextual incompleteness (37%), and syntactic variability (21%), which results in unhelpful and unpredictable responses.

To address this problem, a conceptual-practical model is proposed that combines structured prompt templates, a localized glossary of key terms, and clear instructions on the response format.
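The three components named above can be illustrated with a minimal Python sketch. It is not the authors' implementation: the section names, the glossary entries, and the `build_prompt` helper are all assumptions made for illustration, since the abstract does not specify the template layout.

```python
from string import Template

# Hypothetical glossary: terms and definitions are illustrative only,
# they are not taken from the paper.
GLOSSARY = {
    "hex": "one hexagonal cell of the game map",
    "action card": "a card that prescribes one enemy action for the current turn",
}

# Hypothetical structured template with fixed, named sections.
PROMPT_TEMPLATE = Template(
    "ROLE: $role\n"
    "TASK: $task\n"
    "GLOSSARY:\n$glossary\n"
    "RESPONSE FORMAT: $response_format\n"
)


def build_prompt(role, task, response_format, glossary=GLOSSARY):
    """Assemble a formalized prompt from a template, a glossary, and format rules."""
    # Sort the terms so the same inputs always produce the same prompt text.
    glossary_lines = "\n".join(
        f"- {term}: {definition}" for term, definition in sorted(glossary.items())
    )
    return PROMPT_TEMPLATE.substitute(
        role=role,
        task=task,
        glossary=glossary_lines,
        response_format=response_format,
    )


if __name__ == "__main__":
    print(
        build_prompt(
            role="solo wargame designer",
            task="Generate six action cards for the opposing side.",
            response_format="a d6 table, one row per roll, written as 'N: action'",
        )
    )
```

Keeping the glossary and the format instruction as separate template fields means either one can be swapped for another subject area without touching the rest of the prompt.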

Practical verification was carried out by generating a set of action cards for solo wargames with modern large language models: more than 100 prompts were issued and more than 300 responses were analyzed. The experiments demonstrated that the formalized prompts reduced the total error rate by 58% and increased the relevance of responses from 55–65% to 88–92%. The average time for preparing prompts was reduced by 25–40%. The “d6-table” templates ensured the stability of the output format in 90–95% of cases, while JSON structures provided stability in 85–90% of cases. The glossary and structure definition integrated into the prompts minimized semantic discrepancies and syntactic errors.
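Format stability of this kind can be measured by checking each response against the expected structure and counting the share that pass. The sketch below is one possible way to do that, not the procedure used in the study: the "N: action" row layout, the `roll` and `action` JSON keys, and all function names are assumptions.

```python
import json
import re

# Assumed row layout for a d6 table: a roll number 1-6, then ':' or '|', then text.
D6_ROW = re.compile(r"^\s*([1-6])\s*[:|]\s*(\S.*)$")


def is_valid_d6_table(text):
    """True if the rows that look like table rows are numbered 1..6 in order."""
    rolls = []
    for line in text.strip().splitlines():
        match = D6_ROW.match(line)
        if match:  # lines that are not table rows (e.g. a header) are skipped
            rolls.append(int(match.group(1)))
    return rolls == [1, 2, 3, 4, 5, 6]


def is_valid_json_cards(text, required_keys=("roll", "action")):
    """True if text is a JSON list of six objects that each carry the required keys."""
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        return False
    return (
        isinstance(data, list)
        and len(data) == 6
        and all(
            isinstance(card, dict) and all(key in card for key in required_keys)
            for card in data
        )
    )


def stability_rate(responses, validator):
    """Share of responses (0.0 to 1.0) that pass the given format validator."""
    if not responses:
        return 0.0
    return sum(1 for response in responses if validator(response)) / len(responses)
```

For example, `stability_rate(responses, is_valid_d6_table)` applied to a batch of model outputs gives a fraction that can be compared with the ranges reported above, provided the validator matches the format the prompt actually requested.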

A distinctive feature of the proposed prompt templates is their adaptability to different subject areas through the use of a description language specific to each area. The research results have practical value for automating game content development and could be adapted to other subject areas where the accuracy, consistency, and structure of language model responses are important.

The proposed systematic approach facilitates the automation of complex content development with a guaranteed increase in the quality and predictability of responses from large language models.

License

Copyright (c) 2025 Vladyslav Oliinyk, Andrii Biziuk, Zhanna Deineko, Viktor Chelombitko

This work is licensed under a Creative Commons Attribution 4.0 International License.
